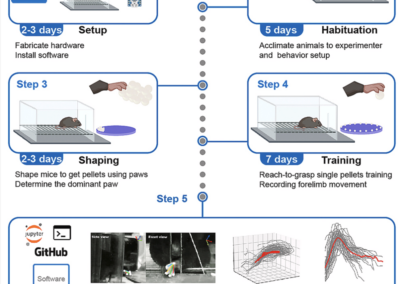

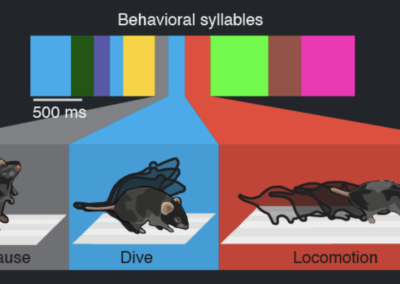

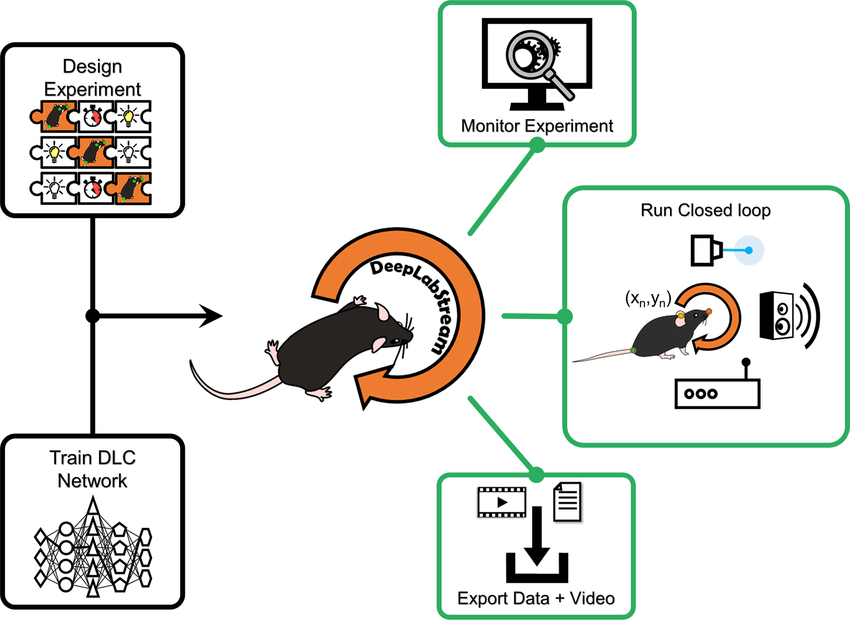

Video analysis of freely moving animals has become a staple of animal neuroscience research. In the last few years, several software packages for extracting key information from video recordings of animal behavior have become available. Packages such as DeepLabCut and SLEAP, for example, combine user input with deep learning to track the subject's body parts and save statistics on its movement. This information can be used to examine the animal's behavior at specific moments during a task. Combining such deep learning systems with electrophysiology techniques opens up new possibilities for the scientific community.

Jens F. Schweihoff and colleagues have developed DLStream, a DeepLabCut add-on tool that integrates both approaches into software that actively "watches" the subject and changes task parameters, such as starting or stopping a trial, when the subject holds or moves into a specific position (e.g., facing a screen could start a trial). The tool can therefore semi-automate the beginning and end of an experiment, and what happens in between, based on the subject's behavior.

Since the original paper was published in 2020, Schweihoff et al. have expanded DLStream's integrations, making it fully compatible with SimBA and B-SOiD for online classification of user-defined behaviors. With SimBA, DLStream can also classify the social behavior of multiple animals in real time. The GitHub repository includes a wiki with setup examples for several of the newly supported software packages.

This research tool was created by your colleagues. Please acknowledge the Principal Investigator, cite the article in which the tool was described, and include an RRID in the Materials and Methods of your future publications. Project portal RRID:SCR_022359

Learn more about using DLStream from the paper. Get access to the DLStream software on GitHub!
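As a rough illustration of the posture-triggered trial control described above (for example, a trial that starts when the animal faces a screen), the minimal Python sketch below assumes a pose estimator already supplies per-frame `neck` and `nose` keypoint coordinates in image pixels. The names `HeadDirectionTrigger` and `TrialController`, the keypoint names, the 30° tolerance, and the consecutive-frame rule are all hypothetical choices made for illustration; this is not DLStream's API, whose actual trigger and experiment modules are described in the paper and the GitHub wiki.

```python
import math
from dataclasses import dataclass, field


@dataclass
class HeadDirectionTrigger:
    """Hypothetical closed-loop trigger (NOT DLStream's API).

    Returns True when the head, taken as the vector from the neck keypoint
    to the nose keypoint, points toward a target direction (e.g. a screen)
    within a given tolerance. Angles come from atan2(dy, dx) in image pixel
    coordinates, where y grows downward.
    """
    target_angle_deg: float      # direction of the screen in image coordinates
    tolerance_deg: float = 30.0  # allowed deviation from the target direction

    def __call__(self, neck: tuple[float, float], nose: tuple[float, float]) -> bool:
        dx, dy = nose[0] - neck[0], nose[1] - neck[1]
        heading = math.degrees(math.atan2(dy, dx))
        # Smallest signed angular difference, wrapped into [-180, 180).
        diff = (heading - self.target_angle_deg + 180.0) % 360.0 - 180.0
        return abs(diff) <= self.tolerance_deg


@dataclass
class TrialController:
    """Hypothetical controller: starts a trial once the trigger has been on
    for `min_frames` consecutive frames, and stops it once the trigger has
    been off for `min_frames` consecutive frames."""
    trigger: HeadDirectionTrigger
    min_frames: int = 5
    running: bool = False
    _streak: int = 0
    events: list[str] = field(default_factory=list)

    def update(self, frame_idx: int, pose: dict[str, tuple[float, float]]) -> None:
        active = self.trigger(pose["neck"], pose["nose"])
        # Count consecutive frames that disagree with the current trial state.
        self._streak = self._streak + 1 if active != self.running else 0
        if self._streak >= self.min_frames:
            self.running = active
            self._streak = 0
            # A real setup would start/stop the stimulus or hardware here.
            self.events.append(f"{'start' if active else 'stop'}@{frame_idx}")


# Simulated pose stream; the screen lies to the right (0 degrees).
controller = TrialController(HeadDirectionTrigger(target_angle_deg=0.0), min_frames=3)
facing = {"neck": (100.0, 100.0), "nose": (110.0, 101.0)}  # heading ~5.7 degrees
away = {"neck": (100.0, 100.0), "nose": (90.0, 100.0)}     # heading 180 degrees
for i, pose in enumerate([away] * 2 + [facing] * 5 + [away] * 4):
    controller.update(i, pose)
print(controller.events)  # → ['start@4', 'stop@9']
```

Requiring several consecutive frames before switching state is one simple way to keep single-frame tracking errors from toggling a trial on and off; the frame count and tolerance would need tuning for a given setup and frame rate.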