Markerless Mouse Tracking for Social Experiments

Kartikeya Murari and colleagues at the University of Calgary described their video-based tool, Markerless Mouse Tracking, in an eNeuro article published in February 2024.

The behavior of multiple interacting animals is often quantified with tools that are not accurate enough to work without human intervention. These tools typically require large amounts of training data, along with human correction after the learning period. Murari and colleagues sought to address this issue by developing a markerless, video-based tool for tracking mice in social settings.

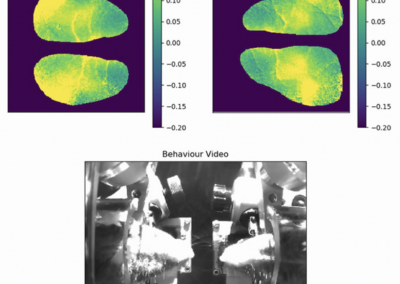

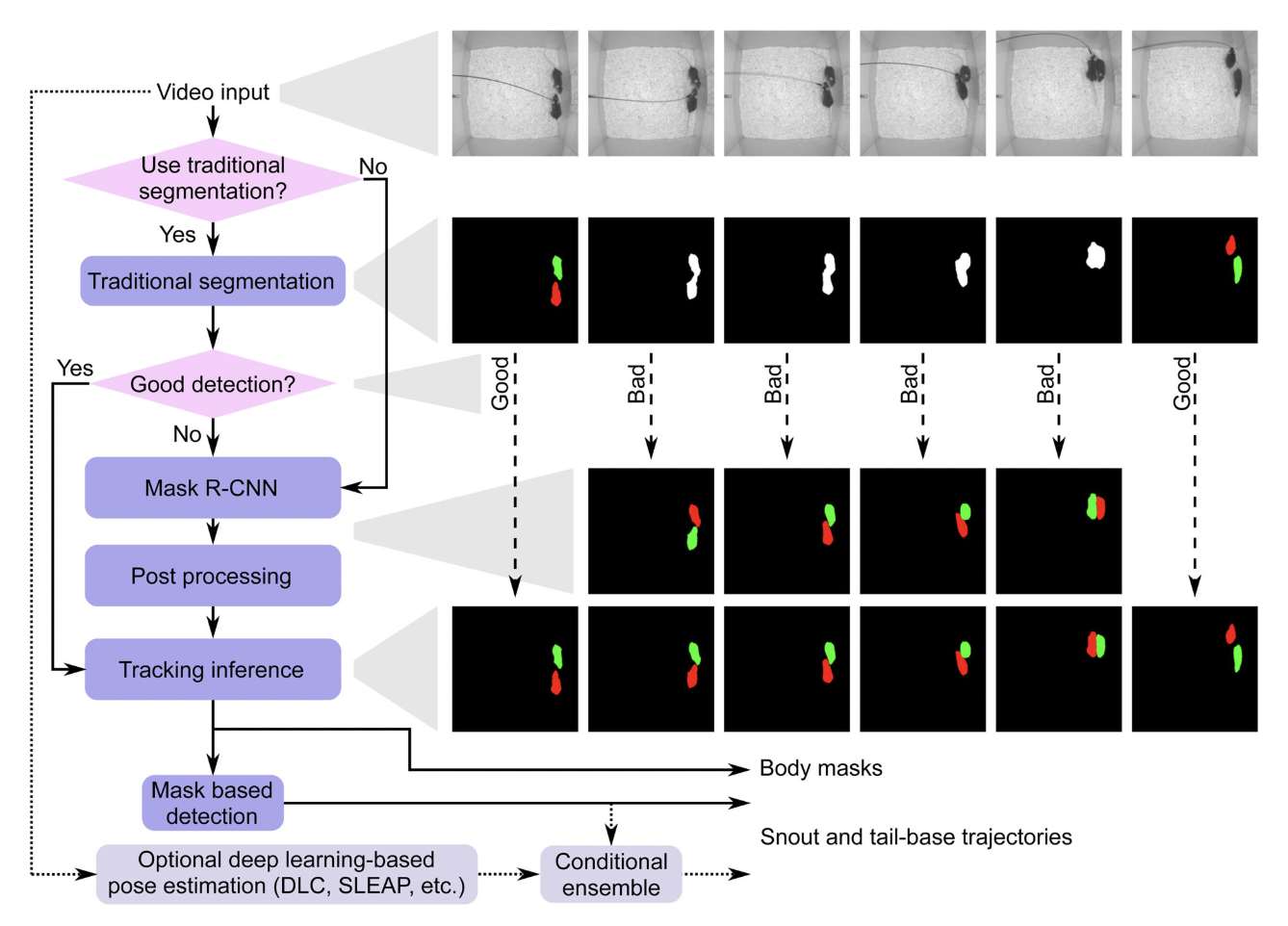

The Markerless Mouse Tracking tool requires only a small set of images to train its deep-learning algorithm and does not require human correction. The method starts with a standard segmentation-based tracking step, then processes the data with a Mask R-CNN model to better separate multiple mice and reduce segmentation errors. Post-processing then further reduces errors remaining from the Mask R-CNN stage. Next, tracking inference recovers the path of each animal by comparing the masks in each frame with one another. Lastly, a new approach termed ‘mask-based detection’ (MD) uses deep-learning techniques (DeepLabCut (DLC) and SLEAP) to identify the snout and tail-base of each mouse throughout the video.
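To make the tracking inference step more concrete, here is a minimal sketch of how identities might be carried from one frame to the next by comparing masks. It is an illustration only, not the authors' code: the overlap score (intersection over union), the exhaustive matching, and the names `mask_iou`, `match_masks`, `track`, and `rect` are all assumptions made for this example, and masks are represented as plain sets of pixel coordinates rather than Mask R-CNN output.

```python
from itertools import permutations


def mask_iou(a, b):
    """Intersection over union of two masks given as sets of (row, col) pixels."""
    union = len(a | b)
    return len(a & b) / union if union else 0.0


def match_masks(prev_masks, curr_masks):
    """Return `order` such that curr_masks[order[i]] continues identity i.

    Assumption for this sketch: both frames hold the same number of masks.
    Every assignment is tried and the one with the highest total IoU wins,
    which is only practical for the few animals in a typical cage.
    """
    n = len(prev_masks)
    best = max(
        permutations(range(n)),
        key=lambda p: sum(mask_iou(prev_masks[i], curr_masks[p[i]]) for i in range(n)),
    )
    return list(best)


def track(frames):
    """Reorder the masks of every frame so that list index equals identity."""
    tracked = [frames[0]]
    for masks in frames[1:]:
        order = match_masks(tracked[-1], masks)
        tracked.append([masks[j] for j in order])
    return tracked


def rect(r0, c0, h, w):
    """Hypothetical helper: a rectangular mask standing in for one mouse."""
    return {(r, c) for r in range(r0, r0 + h) for c in range(c0, c0 + w)}


# Two toy frames: each mouse moves one pixel, and the second frame
# lists the masks in the opposite order.
frames = [
    [rect(0, 0, 4, 4), rect(10, 10, 4, 4)],
    [rect(10, 11, 4, 4), rect(0, 1, 4, 4)],
]
tracked = track(frames)
```

After `track` runs, index 0 in every frame refers to the same toy mouse, even though the second frame listed its masks in a different order. A real pipeline would also need to handle missing or merged masks, which this sketch ignores.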

Compared to standard automated tracking that requires human correction, the Markerless Mouse Tracking model is 15% more accurate at tracking mice. It also switches the identities of mice in social situations at a significantly lower rate, near zero. This indicates that the model is far more effective at tracking multiple mice, a capability often needed in video-based behavioral studies. Because it requires less human intervention and time, it can also reduce costs, especially with large datasets.

This research tool was created by your colleagues. Please acknowledge the Principal Investigator, cite the article in which the tool was described, and include an RRID in the Materials and Methods of your future publications. RRID: SCR_025368

Special thanks to Abby St. Jean, a neuroscience undergraduate at American University, for providing this project summary.

Access the code in the repository on GitHub.