Higher education faces a number of challenges in the twenty-first century, including declining student enrollments, financial difficulties, and decreased state funding (Wiley Education Services, n.d.). In a 2016 survey by the Babson Survey Research Group, two-thirds of the academic leaders polled said that online education is integral to their strategic plan (Babson Survey Research Group, 2016).

As a result, online enrollment is on the rise. A report from the U.S. Department of Education’s Integrated Postsecondary Education Data System (IPEDS) shows that the percentage of students taking at least one online class at either a public or private nonprofit institution increased from 30 percent in 2017 (Ginder, Kelly-Reid, & Mann, 2018) to 31.6 percent in 2018 (U.S. Department of Education, 2019). The percentage of students enrolled exclusively online increased from 30.4 percent in 2017 (Ginder, Kelly-Reid, & Mann, 2018) to 32.5 percent in 2018 (U.S. Department of Education, 2019). Although the rate of growth appears to be slowing compared with previous years, online education continues to drive overall enrollment growth in higher education (Lederman, 2019).

Despite the popularity of online learning among students, concerns about its quality persist among academic leaders. In a 2015 survey, 29 percent of the chief academic officers polled believed that online classes are not as valuable as face-to-face classes (Allen, Seaman, Poulin, & Straut, 2016). The larger body of research, however, shows no significant difference in student learning outcomes between the two modalities (Cavanaugh & Jacquemin, 2015; Kemp & Grieve, 2014). In fact, some studies show that online students may even outperform their face-to-face counterparts (Means, Toyama, Murphy, Bakia, & Jones, 2009). Regardless of the medium, quality course design is imperative to maximize student learning (Muller et al., 2019). Furthermore, assessment of student learning is a critical competency of online educators (Martin, Budhrani, Kumar, & Ritzhaupt, 2019).

In this literature review, I plan to explore innovations in the assessment of online learning, particularly the use of big data and learning analytics. In an era of heightened accountability and decreasing student enrollments, Dringus (2012) argues, higher education is especially interested in big data and learning analytics as they relate to student learning online.

As a full-time faculty member in a fully online master’s degree program, I have seen first-hand how engaging and rewarding online teaching and learning can be. I am disheartened by the negative perceptions of online learning despite the body of research showing that learning outcomes are similar across modes of delivery. With the proliferation of technology and web tools available to support online learning, I am interested in the concepts of big data and learning analytics (LA), and more specifically in how they can be leveraged to assess student learning in the online classroom.

Big Data in Business

In the digital age, technology is pervasive. People today have GPS on their smartphones, watches that track and monitor their heart rates, and televisions that can predict their viewing habits. A digital footprint is created every time you log on to, or complete a transaction on, a digital device (Marr, 2017). This footprint becomes a data set that can be sorted, quantified, and analyzed to understand the user’s behavior (Yupangco, 2017).

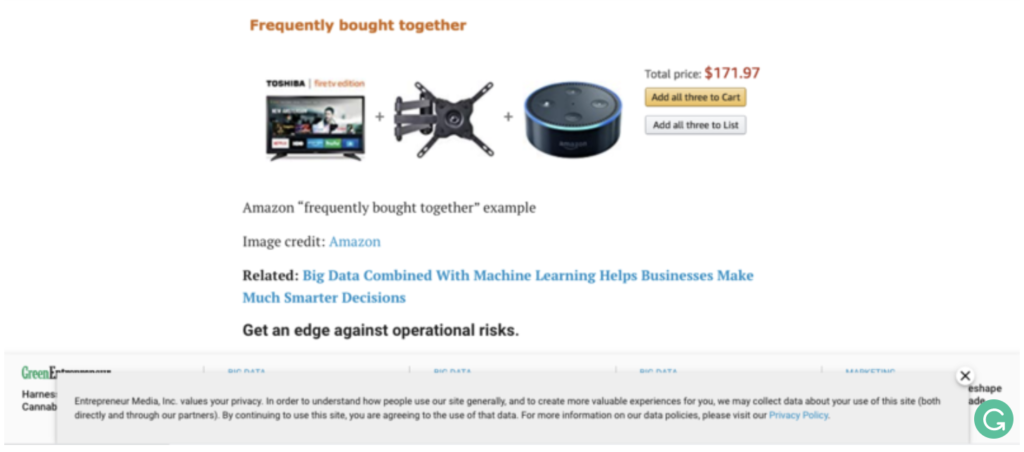

For example, in my preliminary search for information on big data, I explored some mainstream articles to better understand how the business sector might be using big data analytics to drive decision making. While reading an article on Entrepreneur.com, I took a screenshot to capture the image, as well as the pop-up, that emerged on my screen:

(Balkhi, 2019)

The timing of the pop-up message shown above was impeccable. Not only does this image showcase a practical example of how Amazon uses data to upsell customers based on the purchasing history of previous buyers, but the pop-up at the bottom of the screen also reads:

Entrepreneur Media, Inc. values your privacy. In order to understand how people use our site generally, and to create more valuable experiences for you, we may collect data about your use of this site (both directly and through our partners). By continuing to use this site, you are agreeing to the use of that data. For more information on our data policies, please visit our Privacy Policy (Entrepreneur, n.d.).

As technology permeates the lives of people around the world, there is a tremendous amount of data to be harvested (Watson, Wilson, Drew, & Thompson, 2017). In the corporate sector, this data is used by large companies to improve business practices and, ultimately, to bolster the bottom line.

In a 2017 Harvard Business Review article, Bean shares more specific examples of how Fortune 1000 businesses are using big data. As seen in the graphic below, the top three areas in which businesses are realizing the most value from collecting and analyzing data are decreasing expenses, fostering innovation, and launching new products or services (Bean, 2017).

(Bean, 2017)

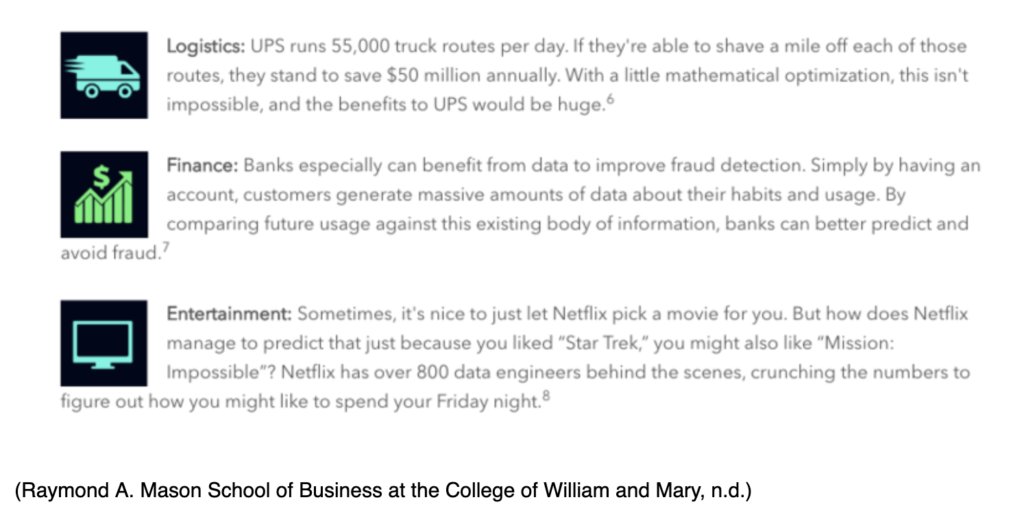

These concepts are further explained in a blog post by the College of William and Mary’s Raymond A. Mason School of Business (n.d.), which offers more practical examples of how large companies use big data to improve business practices. For instance, UPS could save $50 million a year by shortening each of its 55,000 daily routes by just one mile. Banks can track and monitor customer purchasing behavior to better detect fraudulent activity, and entertainment providers like Netflix can predict which movies or shows a user might enjoy based on their current viewing preferences.
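The UPS figure can be sanity-checked with simple arithmetic. The Python sketch below is illustrative only: it assumes about 260 delivery days per year (five days a week), a number the blog post does not give, and derives the per-mile saving that the $50 million claim would imply under that assumption.

```python
# Back-of-the-envelope check of the UPS example.
# ASSUMPTION: about 260 delivery days per year (5 days x 52 weeks);
# the source blog post does not state this number.
ROUTES_PER_DAY = 55_000
MILES_SAVED_PER_ROUTE = 1
DELIVERY_DAYS_PER_YEAR = 260  # assumed, not from the source
ANNUAL_SAVINGS_USD = 50_000_000

# Total miles removed from the network in a year.
miles_saved_per_year = ROUTES_PER_DAY * MILES_SAVED_PER_ROUTE * DELIVERY_DAYS_PER_YEAR

# Saving each removed mile would need to represent for the claim to hold.
implied_cost_per_mile = ANNUAL_SAVINGS_USD / miles_saved_per_year

print(f"Miles saved per year: {miles_saved_per_year:,}")
print(f"Implied saving per mile: ${implied_cost_per_mile:.2f}")
```

Under that assumption, each mile removed would have to be worth roughly $3.50 in operating costs; a different number of delivery days would scale the implied figure inversely.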

From improving business practices to enhancing the customer experience, big data has many applications for companies around the world. Given this success, it should come as no surprise that institutions of higher education are following suit (Watson, Wilson, Drew, & Thompson, 2017). Faced with declining overall enrollments and financial difficulties (Wiley Education Services, n.d.), more and more universities are paying attention to big data and learning analytics. Learning analytics holds promise for helping institutions increase student retention and improve student outcomes (Dietz-Uhler & Hurn, 2013).

Big Data and Learning Analytics in Education

“The idea is simple yet potentially transformative: analytics provides a new model for college and university leaders to improve teaching, learning, organizational efficiency, and decision making and, as a consequence, serve as a foundation for systemic change” (Long & Siemens, 2011).

Bart Collins (n.d.), a clinical professor at Purdue University, describes some of the more specific benefits that institutions of higher education can realize. At the student level, access to analytics provides more information about academic performance. Using the analogy of a Fitbit or Apple Watch, Collins explains how data can allow users to notice patterns in their behavior, make changes based on the feedback, and “compare progress towards learning goals” (Collins, n.d., para. 3). At the instructor level, educators can monitor student behaviors to better understand how course materials are being used, and may even be able to see how that use relates to learning outcomes (as measured by grades). When patterns emerge, instructors can change the course structure or materials to best meet student needs. Lastly, analytics can provide administrators with information about program performance and enrollments. For example, learning analytics may help answer questions like “which courses are students finding the most engaging?” or “are there student characteristics or engagement patterns that are associated with program retention?” (Collins, n.d., para. 8).
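To make the administrator-level question concrete, here is a minimal Python sketch of how “which courses are students finding the most engaging?” might be approached with an activity log. The log format, the course IDs, and the choice of average active minutes per student as the engagement measure are all hypothetical assumptions, not something Collins prescribes.

```python
from collections import defaultdict

# Hypothetical LMS activity log: (course_id, student_id, minutes_active).
# Course IDs, students, and minutes are invented for illustration.
activity_log = [
    ("EDU501", "s1", 42), ("EDU501", "s2", 35), ("EDU501", "s1", 18),
    ("EDU502", "s3", 12), ("EDU502", "s4", 20),
    ("EDU503", "s5", 55), ("EDU503", "s6", 61),
]

def rank_courses_by_engagement(log):
    """Rank courses by average active minutes per distinct student.

    ASSUMPTION: active minutes stand in for 'engagement'; a real program
    would need to choose and validate its own measure.
    """
    minutes_by_course = defaultdict(int)
    students_by_course = defaultdict(set)
    for course, student, minutes in log:
        minutes_by_course[course] += minutes
        students_by_course[course].add(student)
    averages = {
        course: minutes_by_course[course] / len(students_by_course[course])
        for course in minutes_by_course
    }
    # Highest average engagement first.
    return sorted(averages.items(), key=lambda item: item[1], reverse=True)

for course, avg_minutes in rank_courses_by_engagement(activity_log):
    print(f"{course}: {avg_minutes:.1f} minutes per student")
```

Dividing by distinct students rather than by log entries means a course is not penalized when its students split their time into many short sessions.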

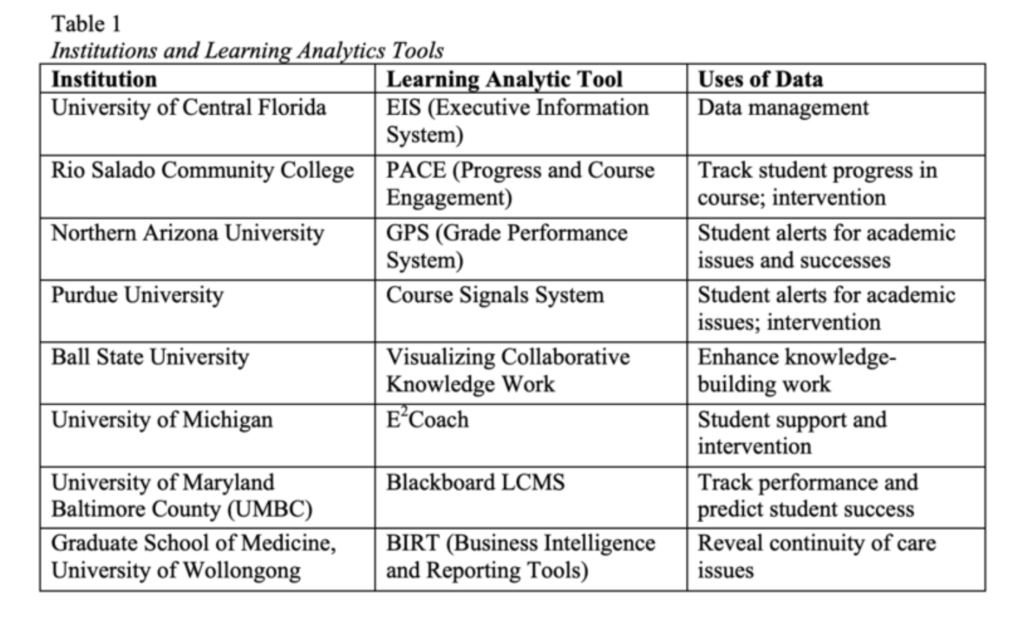

The table that follows depicts some broader examples of how learning analytics are being operationalized in higher education:

(SOLAR, 2019)

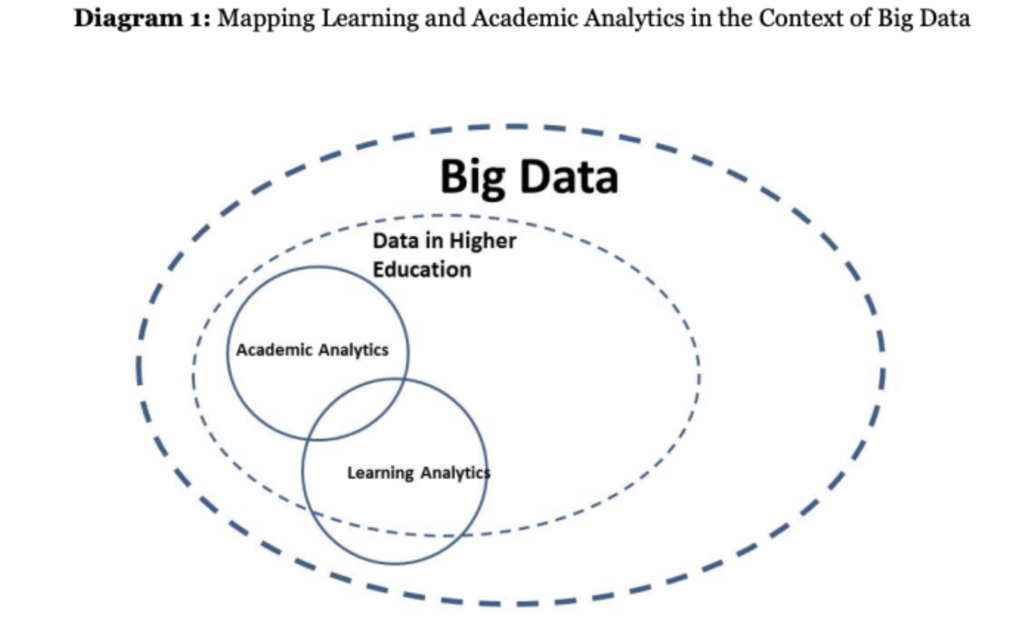

Learning analytics (LA) is another term used in the literature to describe what is done with the data collected from learning platforms. The Society for Learning Analytics Research defines learning analytics as “the measurement, collection, analysis, and reporting of data about learners and their contexts, for purposes of understanding and optimizing learning and the environments in which it occurs” (“About,” para. 1). The video shown above provides a brief introduction to the concept of LA. Some literature also references academic analytics, which describes data generated within the institution but outside the learning space (Prinsloo, Archer, Barnes, Chetty, & van Zyl, 2015). The diagram shown below illustrates the relationship among big data, learning analytics, and academic analytics in the context of higher education.

(Prinsloo, Archer, Barnes, Chetty, & van Zyl, 2015, p. 289)

While educational data mining (EDM) and learning analytics are closely related ideas, there are subtle differences. According to Bienkowski et al. (2012), EDM evaluates learning theories and guides teaching and learning practices, while LA generates applications that directly influence teaching and learning. Baker and Inventado (2014) reiterate that the concepts of EDM and LA are similar and overlap, adding that the differences stem mostly from the interests, or areas of focus, of particular researchers rather than from a true philosophical difference.

Dietz-Uhler and Hurn (2013) suggest that “learning analytics can help faculty identify at-risk learners and provide interventions, transform pedagogical approaches, and help students gain insight into their own learning” (p. 23).

Assessment in Online Learning

Reeves (2000) observed a strong focus on assessment and assessment practices in higher education over the preceding two decades. He posits that this increased focus may be related to grade inflation, academic integrity, and “calls for greater accountability with respect to educational outcomes” in higher education (p. 101).

Assessment of student learning outcomes is a critical aspect of intelligent course design, regardless of the mode of delivery, and best practices for learning assessment in the traditional classroom also apply to online learning (Muller et al., 2019). In a 2019 study of the key competencies of award-winning online faculty, researchers identified seven competencies related to assessing student learning in the online modality:

- Design assessment for courses

- Provide timely, meaningful, and consistent feedback

- Evaluate and revise assessments in courses

- Provide individual and group feedback

- Use student data to guide the feedback process

- Provide information to students about their progress

- Provide feedback in written, audio, and video forms

(Martin, Budhrani, Kumar, & Ritzhaupt, 2019, p. 198)

Muller et al. (2019) report that both students and faculty agree that online learning should include a range of assessments, such as projects, peer evaluations, and timed tests. A wide range of assessments is especially important in higher education, where the focus includes not only specific knowledge and skills but also habits of mind, like problem-solving or ethical reasoning (Reeves, 2000). To maximize student learning, timely, high-quality feedback is critical for each of these assessments.

Although traditional assessments, like quizzes or tests, have their drawbacks, they may be used in online learning because they are easy and objective to grade (Dikli, 2003). Traditional assessments are thought to primarily assess lower-order thinking skills, like recall (Quansah, 2018); even so, they may still serve a valuable purpose in the learning process.

Despite the ease of grading that comes with multiple-choice tests, some argue that authentic assessments, like case studies or collaborative projects, may be a superior means for assessing learner mastery. Authentic assessments often require higher-order thinking skills, like synthesis or creation. This type of engagement may help students to succeed in online courses (Hulleman, Schrager, Bodmann, & Harackiewicz, 2010). Reeves (2000) asserts that “online learning environments provide enormous potential for enhancing the quality of academic assessment regardless of whether students are on-campus or at a distance, but that such improvements will require new approaches to assessment…” (p. 102).

In an online course that follows best practices in learning design, learning is often student-centered: students actively construct their own ideas and test them in the context of the online classroom. Students may also be able to exercise choice in lesson content as well as in how they are assessed (Muller et al., 2019). As online learning evolves and learning management systems capture more and more data points, learning analytics may open up new means of assessment.

Case Study: Applications of LA in Online Learning

As we look for ways to leverage learning analytics in online assessment practices, it is useful to define exactly what is being assessed. Do assessments provide measures of student learning or for student learning (Martin & Ndoye, 2016)? Williams (2017) explains that alternative assessment provides formative feedback that is used for learning, as opposed to summative feedback of learning. Can alternative (learner-centered) assessments serve both purposes? In the following case study, Martin and Ndoye (2016) provide practical examples of how learning analytics can be leveraged in online, learner-centered assessment, and of its impact on teaching, learning, and potential course design.

Online courses most often employ four types of assessment: (1) comprehension-type assessments, e.g., quizzes or tests; (2) discussion boards; (3) reflection-focused assessments, e.g., essays or research papers; and (4) project-based assessments, e.g., presentations or products. Table 1 on page 5 of Martin and Ndoye’s (2016) study provides examples of LA techniques, along with the type of data that can be obtained from each form of assessment. Notably, many of these assessments can produce quantitative or qualitative measures, depending on how they are set up in the analytics program being used.

Course assignments in this case study included a mix of quizzes and projects administered over 15 weeks. The authors used two tools to analyze the data generated by course assignments: ManyEyes for qualitative data (see the introductory video below) and Tableau for quantitative data. Both ManyEyes and Tableau are visual analysis tools that allow users to make new connections within a given set of data.

(IBM, 2011)

The data collected from the comprehension assessments (quizzes) in this study included time spent on the quiz, quiz score, and the number of times the quiz was accessed. These data points were tracked and plotted visually. Visual depictions of data give instructors a better understanding of student behaviors, which they can use to provide individualized feedback for improvement. For example, the authors observed relationships among quiz scores, the number of times the quiz was accessed, and the total time spent on the quiz during each visit. This information could be used to differentiate instruction by providing students with more targeted feedback for improvement.
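The kind of analysis described above can be sketched in a few lines of Python. This is not Martin and Ndoye’s (2016) procedure, which relied on visualization in Tableau; it is a hypothetical rule-based example that combines the three quiz data points (score, times accessed, time spent) to describe how each low-scoring student engaged with the quiz. The 70 percent cutoff, the visit and time thresholds, and the sample records are assumptions.

```python
from dataclasses import dataclass

@dataclass
class QuizRecord:
    """One student's activity on a single quiz (hypothetical data)."""
    student: str
    score: float          # percent correct
    times_accessed: int   # number of visits to the quiz
    total_minutes: float  # time spent across all visits

    @property
    def minutes_per_visit(self) -> float:
        if self.times_accessed == 0:
            return 0.0
        return self.total_minutes / self.times_accessed

def students_needing_feedback(records, score_cutoff=70.0):
    """Return (student, pattern) pairs for students scoring below the cutoff.

    ASSUMPTION: the cutoff and the visit/time thresholds are illustrative,
    not values taken from Martin and Ndoye (2016).
    """
    flagged = []
    for r in records:
        if r.score >= score_cutoff:
            continue  # no targeted feedback needed on this quiz
        if r.times_accessed >= 3 and r.minutes_per_visit < 10:
            pattern = "many short visits"
        elif r.times_accessed <= 1:
            pattern = "single visit"
        else:
            pattern = "several longer visits"
        flagged.append((r.student, pattern))
    return flagged

records = [
    QuizRecord("s1", 92, 1, 25),
    QuizRecord("s2", 58, 5, 30),
    QuizRecord("s3", 64, 1, 8),
    QuizRecord("s4", 66, 2, 40),
]
for student, pattern in students_needing_feedback(records):
    print(student, "->", pattern)
```

Each pattern could prompt a different message: a student with a single visit might be encouraged to review and retry, while a student with several longer visits might benefit more from help with the content itself.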

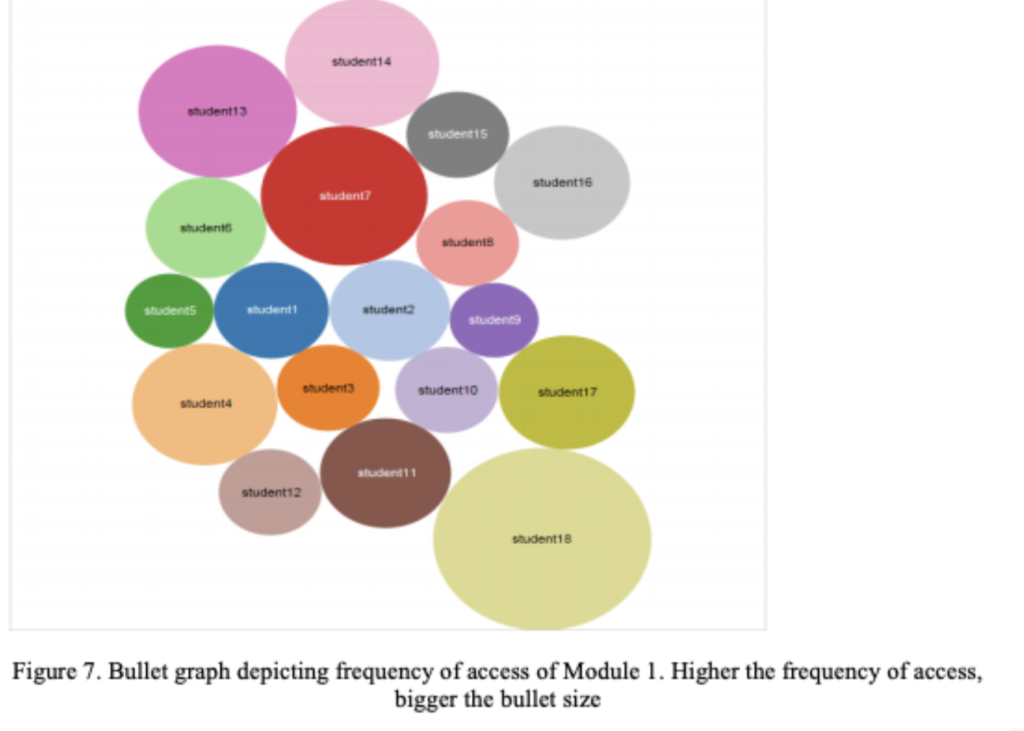

In the project-based assessment, data points included time spent in the learning module and the project score based on a grading rubric. The authors used a bubble chart to depict ‘frequency of access’ to the project: in the image below, larger circles represent students who accessed the project more frequently, and smaller circles represent students who accessed it less frequently. By comparing ‘frequency of access’ with ‘assignment grade’, instructors may discover emerging patterns that help them better understand the relationship between effort and learning. Does the amount of time spent on a project correlate with a student’s final grade on that assignment? This information could also be useful for evaluating class participation.
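The question at the end of that paragraph is, in statistical terms, a question about correlation. The Python sketch below computes a Pearson correlation coefficient between how often each student accessed a project and the rubric score they earned. The data are invented, and Pearson’s r is simply one common way to put a number on the pattern a chart shows visually; it is not necessarily what the case study computed.

```python
import math

def pearson_r(xs, ys):
    """Pearson correlation coefficient between two equal-length sequences."""
    if len(xs) != len(ys) or len(xs) < 2:
        raise ValueError("need two sequences of equal length (at least 2 items)")
    mean_x = sum(xs) / len(xs)
    mean_y = sum(ys) / len(ys)
    # Covariance and variances (the 1/n factors cancel, so they are omitted).
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var_x = sum((x - mean_x) ** 2 for x in xs)
    var_y = sum((y - mean_y) ** 2 for y in ys)
    if var_x == 0 or var_y == 0:
        raise ValueError("correlation is undefined when a variable is constant")
    return cov / math.sqrt(var_x * var_y)

# Hypothetical data: how often each student opened the project module,
# and the rubric score (out of 100) they earned on the project.
access_counts = [2, 4, 5, 7, 9, 12]
project_scores = [68, 72, 80, 78, 88, 91]

r = pearson_r(access_counts, project_scores)
print(f"r = {r:.2f}")
```

A value close to +1 would suggest that students who opened the project more often also tended to score higher. Even then, correlation alone cannot show that the extra visits caused the higher grades, and a single class will usually provide only a small sample.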

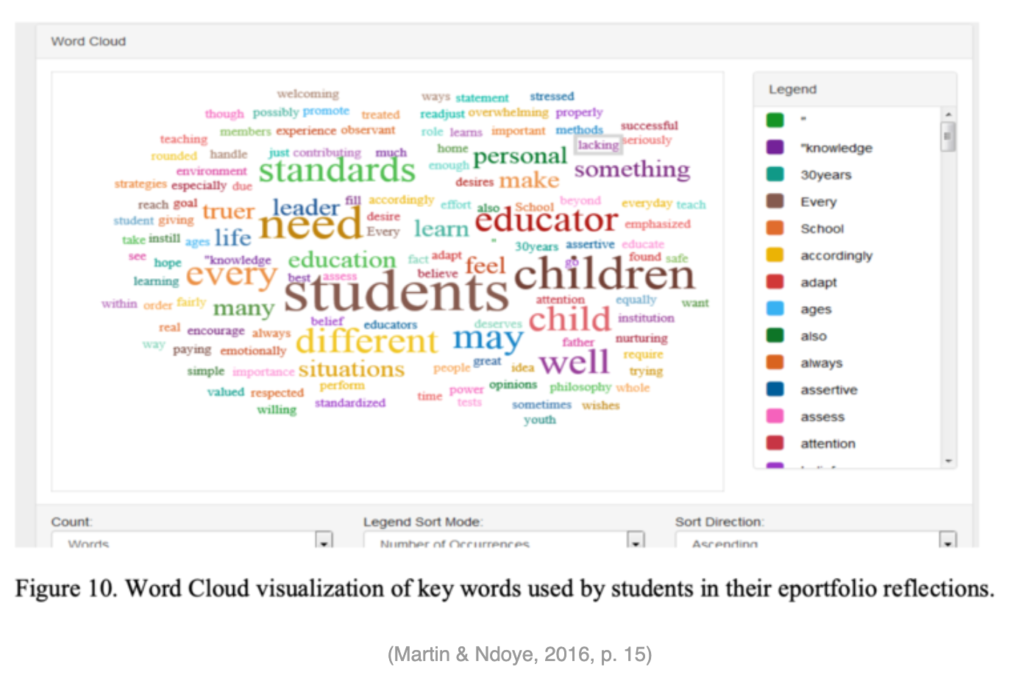

For reflection-focused assessments, the researchers entered learners’ written reflections into ManyEyes.

In this case, instructors could identify the keywords or phrases used most frequently in students’ writing. This may be useful if specific words, phrases, or concepts should appear in a student’s writing. It is also possible to identify broader themes, or topics, in students’ writing; however, additional software may be needed.
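The case study used ManyEyes for this step. The Python sketch below does not reproduce that tool; it illustrates the underlying idea of counting word frequencies across reflections and checking each reflection for terms the instructor expects to see. The stop-word list, the sample reflections, and the expected terms are hypothetical.

```python
import re
from collections import Counter

# A small, illustrative stop-word list; real text tools use much larger ones.
STOP_WORDS = {"the", "a", "an", "and", "of", "to", "in", "i", "me", "my",
              "is", "it", "that", "this", "for", "how", "was", "from"}

def tokenize(text):
    """Lowercase the text and split it into simple word tokens."""
    return re.findall(r"[a-z']+", text.lower())

def keyword_counts(texts):
    """Count non-stop-word terms across a list of student reflections."""
    counts = Counter()
    for text in texts:
        counts.update(w for w in tokenize(text) if w not in STOP_WORDS)
    return counts

def missing_terms(text, expected_terms):
    """Return the expected course terms that do not appear in one reflection."""
    words = set(tokenize(text))
    return sorted(term for term in expected_terms if term not in words)

reflections = [
    "Formative feedback helped me revise my draft before the final rubric review.",
    "I used the rubric to check my work, and feedback from peers was useful.",
    "This module made me rethink how I design quizzes.",
]
EXPECTED_TERMS = {"feedback", "rubric"}  # hypothetical concepts the instructor wants to see

print(keyword_counts(reflections).most_common(3))
for i, text in enumerate(reflections, start=1):
    print(f"Reflection {i} missing: {missing_terms(text, EXPECTED_TERMS)}")
```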

The same technique can be applied to discussion forums. Here again, the program looks for patterns in words or phrases in an effort to pick up on common themes. For example, the authors describe using this technique on an introductory discussion in which students were asked to share some information about themselves, as well as what they hoped to learn in the course. By entering the posts into ManyEyes, the instructor could see which words were used most frequently. In this study, the authors noticed that ‘family’ and closely related words, e.g., ‘sister’ and ‘brother’, were the most common. Identifying such keywords may provide context about students’ backgrounds, which may also play a role in their learning online.
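Grouping words such as ‘sister’ and ‘brother’ under a broader heading like ‘family’ can be approximated with a small theme lexicon. In this hypothetical Python sketch, each theme is a hand-made set of related words, and the function counts how many introductory posts mention each theme at least once. The themes, word lists, and posts are invented for illustration; dedicated text-analysis software would typically go further.

```python
import re
from collections import Counter

# Hypothetical theme lexicon: each theme lists related words to group together.
THEMES = {
    "family": {"family", "sister", "brother", "mother", "father",
               "kids", "children", "husband", "wife"},
    "work": {"job", "work", "career", "manager", "teacher", "employer"},
}

def theme_counts(posts, themes=THEMES):
    """Count how many posts mention each theme at least once."""
    counts = Counter()
    for post in posts:
        words = set(re.findall(r"[a-z']+", post.lower()))
        for theme, lexicon in themes.items():
            if words & lexicon:  # any overlap between the post and the theme's words
                counts[theme] += 1
    return counts

intro_posts = [
    "Hi all! I live with my sister and two kids, and I hope to learn about online design.",
    "My brother took this course last year. I work as a teacher.",
    "I am a career changer hoping to build new skills.",
]
print(theme_counts(intro_posts))
```

Counting posts rather than raw word occurrences keeps one student who mentions family repeatedly from dominating the theme totals.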

Overall, this case study shows how instructors can use the data available in online courses to positively influence student learning in real time. Data analysis may also help instructors better understand student behavior, provide more individualized feedback and instruction, and “benchmark students scores and practices” to better understand overall course performance (Martin & Ndoye, 2016, p. 8). The information gleaned from the data can be used to modify teaching and learning strategies, and also to predict and identify students who may be at risk and in need of intervention.
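Identifying students who may be at risk is often done with predictive models, but the basic idea can be shown with a simple rule-based early-warning flag. The Python sketch below is hypothetical: the weekly fields, the cutoffs, and the rule that a student must trip at least two warning signs before being flagged are assumptions, not recommendations from the case study.

```python
from dataclasses import dataclass
from typing import Dict, List

@dataclass
class WeeklySnapshot:
    """Hypothetical weekly summary of one student's course activity."""
    student: str
    logins_last_7_days: int
    running_grade: float      # percent
    missed_assignments: int

def risk_reasons(s: WeeklySnapshot, min_logins: int = 2,
                 min_grade: float = 70.0, max_missed: int = 1) -> List[str]:
    """List the warning signs a student trips (all thresholds are assumptions)."""
    reasons = []
    if s.logins_last_7_days < min_logins:
        reasons.append("low recent activity")
    if s.running_grade < min_grade:
        reasons.append("running grade below cutoff")
    if s.missed_assignments > max_missed:
        reasons.append("multiple missed assignments")
    return reasons

def flag_students(roster: List[WeeklySnapshot], min_reasons: int = 2) -> Dict[str, List[str]]:
    """Flag students who trip at least `min_reasons` warning signs."""
    flagged = {}
    for s in roster:
        reasons = risk_reasons(s)
        if len(reasons) >= min_reasons:
            flagged[s.student] = reasons
    return flagged

roster = [
    WeeklySnapshot("s1", 5, 88.0, 0),
    WeeklySnapshot("s2", 1, 64.0, 2),
    WeeklySnapshot("s3", 1, 82.0, 0),
    WeeklySnapshot("s4", 3, 61.0, 2),
]
for student, reasons in flag_students(roster).items():
    print(student, reasons)
```

Requiring two signals rather than one is a design choice: it keeps a single quiet week, like student s3’s, from triggering an intervention on its own.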

Limitations and Concerns

Although there are many reported benefits of using learning analytics to predict or measure student learning online, there are also limitations. Dringus (2012) explains that learning management systems produce vast amounts of data that are not always easy to access or interpret: “The lack of transparency and undefined data in the online course may result in poor decision-making about student progress and performance” (p. 88). Dringus (2012) also emphasizes the importance of “getting the right data and getting the data right” (p. 98). Failure to do so, especially in research, may lead decision-makers and stakeholder groups, such as universities or accrediting agencies, to faulty conclusions.
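Dringus (2012) does not prescribe specific cleaning steps, but one way to act on “getting the data right” is to validate raw LMS records before analyzing them and to report what was removed. The Python sketch below uses hypothetical session records and assumes that sessions longer than four hours are tabs left open rather than study time; both the record format and that cutoff are illustrative assumptions.

```python
from typing import Dict, List, Tuple

MAX_PLAUSIBLE_MINUTES = 240  # ASSUMPTION: longer sessions are treated as tabs left open

def clean_sessions(raw: List[Dict]) -> Tuple[List[Dict], Dict[str, int]]:
    """Drop duplicate, incomplete, or implausible session records.

    Returns the cleaned records plus a tally of what was removed and why,
    so that conclusions drawn from the data can be traced back to it.
    """
    seen = set()
    cleaned = []
    dropped = {"duplicate": 0, "missing_student": 0, "implausible_duration": 0}
    for rec in raw:
        key = (rec.get("student"), rec.get("start"), rec.get("minutes"))
        if key in seen:
            dropped["duplicate"] += 1
            continue
        seen.add(key)
        if not rec.get("student"):
            dropped["missing_student"] += 1
            continue
        minutes = rec.get("minutes")
        if minutes is None or not (0 < minutes <= MAX_PLAUSIBLE_MINUTES):
            dropped["implausible_duration"] += 1
            continue
        cleaned.append(rec)
    return cleaned, dropped

raw_sessions = [
    {"student": "s1", "start": "2020-02-03T09:00", "minutes": 35},
    {"student": "s1", "start": "2020-02-03T09:00", "minutes": 35},    # exported twice
    {"student": None, "start": "2020-02-03T10:15", "minutes": 20},    # no student ID
    {"student": "s2", "start": "2020-02-03T11:00", "minutes": 1440},  # tab left open all day
    {"student": "s3", "start": "2020-02-03T12:30", "minutes": -5},    # clock error
    {"student": "s2", "start": "2020-02-04T08:45", "minutes": 50},
]
cleaned, dropped = clean_sessions(raw_sessions)
print(len(cleaned), dropped)
```

Returning the tally of dropped records alongside the cleaned data is one way to address the transparency problem Dringus describes, since anyone reading the results can see how much of the raw data was excluded and why.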

Campbell, DeBlois, and Oblinger (2007) describe some of the common concerns of faculty and administrators in higher education about the adoption of learning analytics. First and foremost are issues of privacy and sharing. Students and faculty may feel uneasy about having their actions or behaviors tracked and monitored online. Questions also remain as to who has access to the data, where it is stored, and how it is shared. Moreover, do colleges and universities have a moral responsibility to use the data they collect to increase student success (Campbell et al., 2007; Dietz-Uhler & Hurn, 2013)?

Another concern is ‘profiling’. Campbell et al. (2007) explain that learning analytics is often used to identify high-performing students as well as those who are underperforming, and the resulting profiles may be used to “prompt interventions or to predict student success” (p. 54). Dietz-Uhler and Hurn (2013) acknowledge that faculty already have expectations for their students, but learning analytics may lead to a new “set of data-driven expectations” (p. 24). Furthermore, the data does not account for social factors that may influence student performance, e.g., health status or home life (Campbell et al., 2007).

Finally, the question remains: what is truly being measured? Can data points such as the number of log-ins or the time spent on a quiz truly measure student learning (Watters, 2012, as cited in Dietz-Uhler & Hurn, 2013, p. 24)?

Opportunities for the Future

Ferguson, Brasher, Clow, Griffiths, and Drachsler (2016) outline a vision for the future of education and the ways in which learning analytics may be leveraged to enhance student learning. By 2025, the authors envision, all learning tools will be equipped with sensors, and classrooms will have cameras with facial recognition software to track and monitor students as they learn. Wearables such as the Apple Watch or Fitbit will track more sophisticated physical information that can be used to support learning, e.g., posture, stress, and glucose levels. Learning analytics will be a more widely accepted practice, and data will be stored in an open system that eliminates issues of data ownership and stewardship. Large datasets will have been established, allowing for more reliable predictions of learner outcomes. Students will have become accustomed to learning management systems guided by learning analytics, ultimately leading to a dramatic shift in the role of educators.

Ferguson et al. (2016) further assert that government and institutional policies will likely dictate the future of learning analytics in education. How learning analytics are utilized will “be greatly shaped by the regulatory framework which is established, the investment decisions made, the infrastructure and specifications which are promoted, and the educational discourse” (p. 1).

As we look to the future, learning analytics holds promise for constituency groups across campus. Avella, Kebritchi, Nunn, and Kanai (2016) conclude:

Data analysis provides educational stakeholders a comprehensive overview of the performance of the institution, curriculum, instructors, students, and post-educational employment outlooks. It also provides scholars and researchers with needed information to identify gaps between education and industry so that educators and institutions can overcome these deficiencies in course offerings. More important, the ability of big data to provide these revelations can help the field of education make significant progress to improve learning processes (p. 21).

References

Avella, J. T., Kebritchi, M., Nunn, S. G., & Kanai, T. (2016). Learning analytics methods, benefits, and challenges in higher education: A systematic literature review. Online Learning, 20(2). https://onlinelearningconsortium.org/jaln_full_issue/online-learning-special-issue-learning-analytics/

Babson Survey Research Group. (2016). Online report card: Tracking online education in the United States [Infographic]. http://www.onlinelearningsurvey.com/reports/2015SurveyInfo.pdf

Balkhi, S. (2019, January 19). How companies are using big data to boost sales, and how you can do the same. Entrepreneur. https://www.entrepreneur.com/article/325923

Bean, R. (2017, March 28). How companies say they’re using big data. Harvard Business Review. https://hbr.org/2017/04/how-companies-say-theyre-using-big-data

Bienkowski, M., Feng, M., & Means, B. (2012). Enhancing teaching and learning through educational data mining and learning analytics: An issue brief. U.S. Department of Education, Office of Educational Technology. Washington, D.C. http://www.ed.gov/technology.

Campbell, J. P., DeBlois, P. B., & Oblinger, D. G. (2007). Academic analytics: A new tool for a new era. EDUCAUSE Review, 42(4), 40–57.

Collins, B. (n.d.). Harnessing the Potential of Learning Analytics Across the University. Wiley Education Services. https://edservices.wiley.com/potential-for-higher-education-learning-analytics/

Dietz-Uhler, B., & Hurn, J. E. (2013). Using learning analytics to predict (and improve) student success: A faculty perspective. Journal of Interactive Online Learning, 12(1), 17–26.

Dikli, S. (2003). Assessment at a distance: Traditional vs. alternative assessments. The Turkish Online Journal of Educational Technology, 2(3), 13–19.

Dringus, L. P. (2012). Learning Analytics Considered Harmful. Journal of Asynchronous Learning Networks, 16(3), 87–100.

Ferguson, R., Brasher, A., Clow, D., Griffiths, D., & Drachsler, H. (2016, April 28). Learning Analytics: Visions of the Future. 6th International Learning Analytics and Knowledge (LAK) Conference, Edinburgh, Scotland. http://oro.open.ac.uk/45312/

Ginder, S. A., Kelly-Reid, J. E., & Mann, F. B. (2018). Enrollment and Employees in Postsecondary Institutions, Fall 2017; and Financial Statistics and Academic Libraries, Fiscal Year 2017: First Look (Provisional Data). U.S. Department of Education. Washington, DC: National Center for Education Statistics. http://nces.ed.gov/pubsearch

Hulleman, C., Schrager, S., Bodmann, S., & Harackiewicz, J. (2010). A meta-analytic review of achievement goal measures: Different labels for the same constructs or different constructs with similar labels? Psychological Bulletin, 136(3), 422. https://doi.org/10.1037/a0018947

IBM. (2011, April 5). Many Eyes. [Video]. YouTube. https://www.youtube.com/watch?v=PH_-ZeB4_GE

Lederman, D. (2019, December 11). Online enrollments grow, but pace slows. Inside Higher Ed. https://www.insidehighered.com/digital-learning/article/2019/12/11/more-students-study-online-rate-growth-slowed-2018

Long, P., & Siemens, G. (2011). Penetrating the fog: Analytics in learning and education. Educause Review, 46(5), 30–40. https://er.educause.edu/articles/2011/9/penetrating-the-fog-analytics-in-learning-and-education

Marr, B. (2017, March 14). The complete beginner’s guide to big data everyone can understand. Forbes. https://www.forbes.com/sites/bernardmarr/2017/03/14/the-complete-beginners-guide-to-big-data-in-2017/#298012a97365

Martin, F., Budhrani, K., Kumar, S., & Ritzhaupt, A. (2019). Award-winning faculty online teaching practices: Roles and competencies. Online Learning, 23(1), 184–205.

Muller, K., Gradel, K., Forte, M., McCabe, R., Pickett, A. M., Piorkowski, R., Scalzo, K., & Sullivan, R. (2019). Assessing student learning in the online modality.

Pappas, C. (2014, July 24). Big data in eLearning: The future of eLearning industry. eLearning Industry. https://elearningindustry.com/big-data-in-elearning-future-of-elearning-industry

Prinsloo, P., Archer, E., Barnes, G., Chetty, Y., & van Zyl, D. (2015). Big(ger) data as better data in open distance learning. International Review of Research in Open and Distributed Learning, 16(1), 284–306.

Raymond A. Mason School of Business at the College of William and Mary. (n.d.). How companies use big data. https://online.mason.wm.edu/blog/how-companies-use-big-data

Reeves, T. C. (2000). Alternative Assessment Approaches for Online Learning Environments in Higher Education. Journal of Educational Computing Research, 23(1), 101–111. https://doi.org/10.2190/GYMQ-78FA-WMTX-J06C

U.S. Department of Education, National Center for Education Statistics. (2019). Integrated Postsecondary Education Data System (IPEDS), Spring 2019, Fall Enrollment component (provisional data).

Watson, C., Wilson, A., Drew, V., & Thompson, T.L. (2017). Small data, online learning and assessment practices in higher education: a case study of failure? Assessment & Evaluation in Higher Education, 42(7), 1030-1045. https://doi.org/10.1080/02602938.2016.1223834

Williams, P. (2017). Assessing Collaborative Learning: Big Data, Analytics and University Futures. Assessment & Evaluation in Higher Education, 42(6), 978–989.

Yupangco, J. (2017, September 4). The reason you need big data to improve online learning. eLearning Industry. https://elearningindustry.com/big-data-to-improve-online-learning-reason-need
