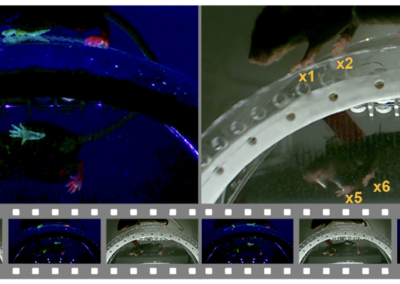

Zexin Chen, Ruihan Zhang and colleagues have developed AlphaTracker, a tool that allows researchers to analyze the behavior of multiple animals through video analysis. Several groups contributed to the tool, including the Department of Computer Science and Zhiyuan College at Shanghai Jiao Tong University and the Massachusetts Institute of Technology. The Python-based software is available on GitHub.

Video analysis tools have long been part of neuroscience research. Many of them can track position and perform pose estimation at a fine level of detail, capturing small movements that can inform neurophysiological studies. However, they are often limited to analyzing a single subject. AlphaTracker was developed to address this limitation and advance multi-animal tracking: it can examine the movement of multiple animals at once.

AlphaTracker is designed to be accessible to most neuroscience labs. A camera is required to capture video, but it does not need to be high quality; the authors report that the program tolerates, and works well with, low-resolution webcams. No advanced programming knowledge is needed. The software requires Python, and its code also uses PyIodine and CanvasJS. The algorithm runs on Linux with GPUs, but it can also be run on Google Colaboratory.

The program works in three steps: tracking, behavioral clustering, and result analysis with a custom user-friendly interface (UI). Tracking involves animal detection, key point estimation, and identity tracking across frames. The key points used to detect and track each animal are the snout, the ears, and the tail-body junction, as shown in the image from the article. The algorithm detects the position of each animal within each frame.
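The identity-tracking step can be illustrated with a minimal sketch. The post does not describe how AlphaTracker links animals from one frame to the next, so the matching rule below (keep the one-to-one assignment that minimizes the mean key point distance between consecutive frames) is an assumption for illustration, as are the names `Pose`, `KEYPOINTS`, `match_identities`, and `make_pose`. It uses the body points named above.

```python
from __future__ import annotations

from dataclasses import dataclass
from itertools import permutations
from math import hypot

# Hypothetical key point names, based on the body points named in the post.
KEYPOINTS = ("snout", "left_ear", "right_ear", "tail_base")


@dataclass
class Pose:
    """(x, y) pixel coordinates of each key point for one animal in one frame."""
    points: dict[str, tuple[float, float]]


def pose_distance(a: Pose, b: Pose) -> float:
    """Mean Euclidean distance between corresponding key points of two poses."""
    total = 0.0
    for k in KEYPOINTS:
        (ax, ay), (bx, by) = a.points[k], b.points[k]
        total += hypot(ax - bx, ay - by)
    return total / len(KEYPOINTS)


def match_identities(prev: list[Pose], curr: list[Pose]) -> list[int]:
    """Return a list where entry i is the index in `curr` of animal i from `prev`.

    Tries every one-to-one assignment and keeps the one with the lowest total
    pose distance (assumed matching rule; practical only for a few animals).
    """
    if len(prev) != len(curr):
        raise ValueError("sketch assumes the same number of animals in both frames")
    best: list[int] = []
    best_cost = float("inf")
    for perm in permutations(range(len(curr))):
        cost = sum(pose_distance(prev[i], curr[j]) for i, j in enumerate(perm))
        if cost < best_cost:
            best, best_cost = list(perm), cost
    return best


def make_pose(x: float, y: float) -> Pose:
    """Hypothetical helper: a mouse facing +x with its body centred at (x, y)."""
    return Pose({
        "snout": (x + 10, y),
        "left_ear": (x + 5, y - 3),
        "right_ear": (x + 5, y + 3),
        "tail_base": (x - 10, y),
    })


frame_t = [make_pose(0, 0), make_pose(100, 50)]
# In the next frame the detector happens to list the two animals in the opposite order.
frame_t1 = [make_pose(98, 52), make_pose(2, 1)]
print(match_identities(frame_t, frame_t1))  # [1, 0]
```

The brute-force search grows factorially with the number of animals; for larger groups, a linear-assignment solver such as SciPy's `linear_sum_assignment` on the same distance matrix would be the usual substitute.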
The image of each detected animal is then processed by a Squeeze-and-Excitation Network (SENet)¹⁷, which outputs the x and y coordinates of each key point along with a confidence score indicating how reliable that estimate is. Mouse behavior is then classified using hierarchical clustering. The hierarchical structure helps identify meaningful interactions and makes reorganizing the clusters more intuitive. The user-friendly interface (UI) allows manual reorganization, inspection, and revision of both the clusters and the key point estimates. For example, if key points are corrected manually, the clustering can be recomputed efficiently with the new data.

AlphaTracker is the product of close collaboration between research groups and builds on previous open-source projects. Because it is not restricted to a single subject, the tool opens up new opportunities for studying social dynamics and their influence on behavior and neurophysiology.

Learn more about AlphaTracker, its implementation, and validation data from the bioRxiv preprint! Get access to the software, docs, and tutorials from the AlphaTracker GitHub!

This post was brought to you by Haley Harkins. This project summary is part of a collection from neuroscience undergraduate and graduate students in the Computational Methods course at American University.
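The hierarchical clustering step described above can also be sketched. The post says only that behavior is classified with hierarchical clustering, so the per-clip features used here (snout-to-snout distance and mean speed), the average-linkage criterion, and the function names `agglomerative` and `average_linkage` are assumptions for illustration, not AlphaTracker's actual feature set or implementation. The second call shows the clustering being rerun after a key point correction changes one clip's features.

```python
from __future__ import annotations

from itertools import combinations
from math import dist


def average_linkage(a: list[int], b: list[int], X: list[list[float]]) -> float:
    """Mean pairwise Euclidean distance between the members of clusters a and b."""
    return sum(dist(X[i], X[j]) for i in a for j in b) / (len(a) * len(b))


def agglomerative(X: list[list[float]], n_clusters: int):
    """Bottom-up hierarchical clustering with average linkage.

    Starts with every clip in its own cluster and repeatedly merges the two
    closest clusters until `n_clusters` remain. Returns one label per row of X
    and the merge history (the dendrogram, from first merge to last).
    """
    if not 1 <= n_clusters <= len(X):
        raise ValueError("n_clusters must be between 1 and the number of clips")
    clusters = [[i] for i in range(len(X))]
    merges = []
    while len(clusters) > n_clusters:
        p, q = min(
            combinations(range(len(clusters)), 2),
            key=lambda pq: average_linkage(clusters[pq[0]], clusters[pq[1]], X),
        )
        d = average_linkage(clusters[p], clusters[q], X)
        merges.append((tuple(clusters[p]), tuple(clusters[q]), d))
        clusters[p] = clusters[p] + clusters[q]  # p < q, so index p stays valid after deleting q
        del clusters[q]
    labels = [0] * len(X)
    for label, members in enumerate(clusters):
        for i in members:
            labels[i] = label
    return labels, merges


# Hypothetical per-clip features: [snout-to-snout distance (cm), mean speed (cm/s)].
X = [
    [2.0, 1.0],    # animals close together, moving slowly
    [2.5, 1.2],
    [30.0, 8.0],   # animals far apart, moving quickly
    [28.0, 9.0],
    [3.0, 10.0],   # animals close together, moving quickly
]
labels, merges = agglomerative(X, n_clusters=3)
print(labels)  # [0, 0, 1, 1, 2]

# Suppose a mislabeled snout made clip 4 look like a close encounter. After its
# key points are corrected, its feature row changes and only clustering is rerun.
X[4] = [29.0, 10.0]
labels, _ = agglomerative(X, n_clusters=3)
print(labels)  # [0, 0, 1, 2, 2]
```

Average linkage is one common choice; single or complete linkage can change the order in which clusters merge. For real datasets, SciPy's `scipy.cluster.hierarchy.linkage` and `fcluster` implement the same idea far more efficiently than this quadratic-per-step loop.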


Read the Preprint

AlphaTracker GitHub

Thanks, Haley!

Have questions? Send us an email!