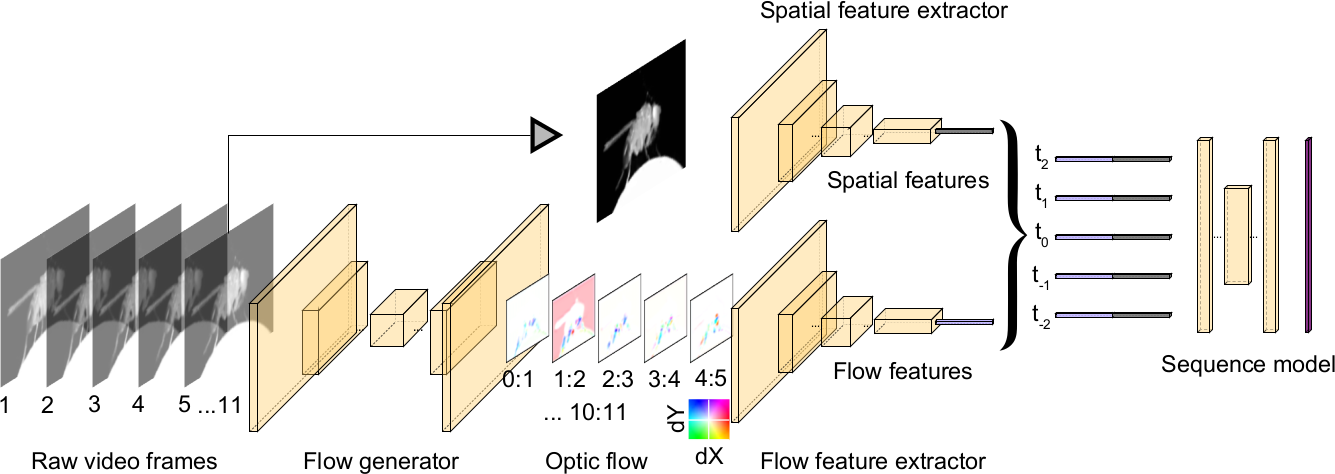

DeepEthogram (DEG) is a recently developed method for temporal action detection in video recordings. It was developed by Jim Bohnslav and colleagues in Chris Harvey’s lab and is described in this preprint. The general idea is to extract relevant features from images and use the time series of those features to classify whether a given behavior is present in each frame of a video. In other words, DeepEthogram performs frame-by-frame “action recognition” (automated behavior classification), which distinguishes it from methods that perform pose estimation (tracking the position and orientation of body parts).

DeepEthogram is written in Python, and the package includes a well-developed graphical user interface (GUI) for labeling behaviors in individual frames. These labels are used to train, in parallel, a spatial feature extractor and a temporal (optic flow) feature extractor, each of which independently estimates the probability of each labeled behavior in each frame. The outputs of the two extractors are then used to train sequence models that estimate the probability of each behavior over time (across frames). The method has been applied to videos of behaving mice and flies to measure the precise onset times of behaviors such as reaching, the time animals spend in grooming bouts, and the transition probabilities between behaviors. Measurements like these may be useful for researchers using operant tasks.
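To make the last step concrete, here is a minimal sketch of how onset times, bout durations, and transition probabilities can be derived from per-frame behavior probabilities. This is not DeepEthogram’s own code. It assumes (1) the two streams’ per-frame probabilities are fused by simple averaging, (2) behaviors are treated as mutually exclusive, so each frame gets its most probable behavior if that probability is at least a fixed 0.5 threshold and a hypothetical “background” label otherwise, and (3) the sequence model is skipped entirely. All function names and the toy numbers are hypothetical.

```python
from collections import Counter, defaultdict
from itertools import groupby

# Hypothetical helpers for illustration only; not part of the DeepEthogram API.

def fuse_probabilities(spatial, flow):
    """Average two per-frame probability tracks (frames x behaviors)."""
    return [[(s + f) / 2 for s, f in zip(s_row, f_row)]
            for s_row, f_row in zip(spatial, flow)]

def to_labels(probs, behaviors, threshold=0.5, background="background"):
    """Assign each frame its most probable behavior, or background if below threshold."""
    labels = []
    for row in probs:
        best = max(range(len(row)), key=row.__getitem__)
        labels.append(behaviors[best] if row[best] >= threshold else background)
    return labels

def bouts(labels, fps):
    """Return (behavior, onset_s, duration_s) for each run of identical labels."""
    result, frame = [], 0
    for label, run in groupby(labels):
        n = len(list(run))
        result.append((label, frame / fps, n / fps))
        frame += n
    return result

def transition_probabilities(bout_list):
    """Estimate P(next behavior | current behavior) from consecutive bouts."""
    counts = defaultdict(Counter)
    for (a, _, _), (b, _, _) in zip(bout_list, bout_list[1:]):
        counts[a][b] += 1
    return {a: {b: c / sum(nxt.values()) for b, c in nxt.items()}
            for a, nxt in counts.items()}

# Toy per-frame probabilities for two behaviors from two hypothetical streams.
behaviors = ["groom", "reach"]
spatial = [[0.9, 0.1], [0.8, 0.1], [0.2, 0.7], [0.1, 0.9], [0.1, 0.2], [0.7, 0.2]]
flow = [[0.7, 0.1], [0.6, 0.3], [0.2, 0.9], [0.3, 0.7], [0.2, 0.1], [0.9, 0.1]]

labels = to_labels(fuse_probabilities(spatial, flow), behaviors)
ethogram_bouts = bouts(labels, fps=30)
grooming_time = sum(d for name, _, d in ethogram_bouts if name == "groom")

print(labels)
print(ethogram_bouts)
print(transition_probabilities(ethogram_bouts))
print(f"time spent grooming: {grooming_time:.3f} s")
```

Treating behaviors as mutually exclusive keeps the bout and transition logic short; a multi-label ethogram, where several behaviors can be active in the same frame, would need per-behavior runs instead.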

Code is available at https://github.com/jbohnslav/deepethogram. Installation via Anaconda is straightforward, and the deep learning methods depend on PyTorch. The GUI can be run on any computer but, as with other modern tools for video analysis, the deep learning methods are realistically feasible only on a machine with an NVIDIA GPU. A Colab notebook is available for running the deep learning components on Google Colab servers.
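Before training locally, it can help to confirm that PyTorch actually sees an NVIDIA GPU. The sketch below uses only the standard `torch.cuda.is_available()` and `torch.cuda.get_device_name()` calls; the `describe_gpu` helper is hypothetical and not part of DeepEthogram.

```python
def describe_gpu():
    """Hypothetical helper: report whether PyTorch can see a CUDA-capable (NVIDIA) GPU."""
    try:
        import torch
    except ImportError:
        return "PyTorch is not installed"
    if torch.cuda.is_available():
        # Name of the first visible CUDA device.
        return f"CUDA GPU available: {torch.cuda.get_device_name(0)}"
    return "No CUDA GPU visible to PyTorch; consider the Colab notebook"

print(describe_gpu())
```

If no GPU is reported, the labeling GUI will still run, but training is better done through the Colab notebook.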

This research tool was created by your colleagues. Please acknowledge the Principal Investigator, cite the article in which the tool was described, and include an RRID in the Materials and Methods of your future publications. RRID:SCR_022355