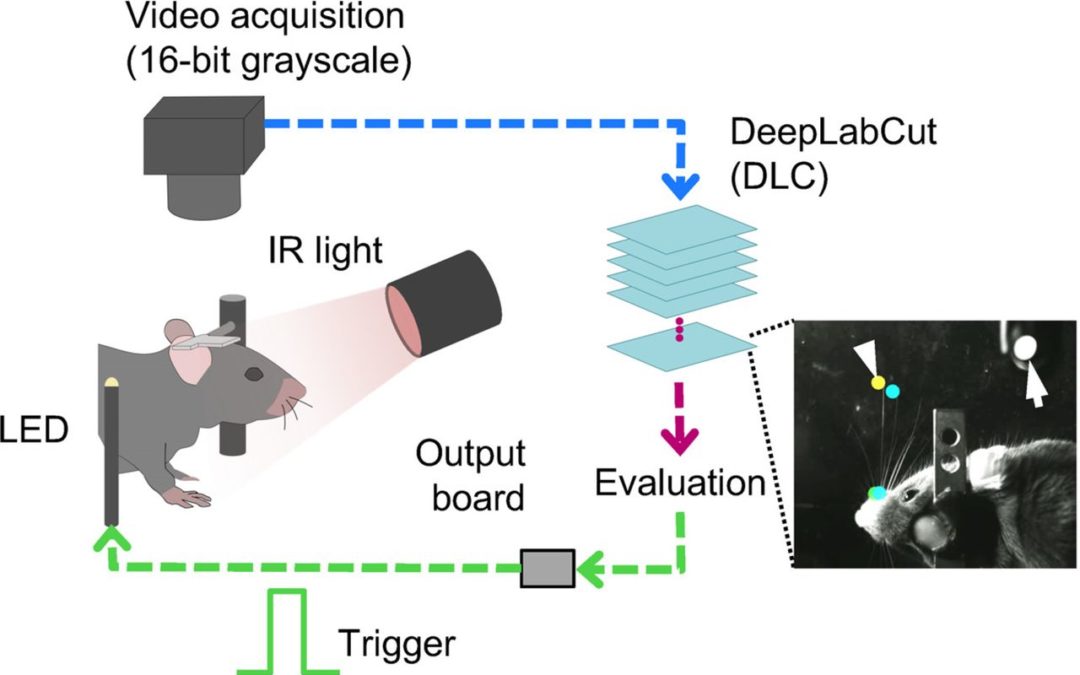

Computer vision and deep-learning approaches have made it possible to quantify animal pose and track behavior. Specifically, DeepLabCut and other deep neural networks have introduced markerless approaches for offline pose estimation and tracking of nearly any body part across behavioral sessions. Triggers can be generated from the positions of body parts that are relevant for inferring neural activity. Furthermore, these systems are generally low-cost, easy to use, and easy to train. Keisuke Sehara and colleagues share a DeepLabCut implementation that tracks movement and generates triggers in real time, as reported in a recent paper in eNeuro: https://www.eneuro.org/content/8/2/ENEURO.0415-20.2021

The team shares their DeepLabCut implementation for a rodent whisker system. A deep neural network was trained on high-speed video of a mouse whisking. The trained network was then applied in real time to video of the same mouse whisking. The tracked positions of the whisker tips were converted to TTL output within behavioral time scales. The system was capable of closed-loop trigger generation in real time with a latency of 10.5 ms. It implements a posture-evaluation mechanism that can be modified for almost any real-time behavioral neurophysiology application. This closed-loop approach can be used to directly manipulate the relationship between movement and neural activity. Additional code repositories are available on Zenodo:

https://zenodo.org/record/4459345#.YO2fnHVKiUk
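
To make the posture-evaluation idea described above more concrete, here is a minimal Python sketch of how tracked whisker-tip positions might be turned into a binary trigger. It is not the authors' code: the names `TrackedPoint`, `evaluate_posture`, and `run_closed_loop`, the mean-position rule, and the threshold and likelihood cutoff are all hypothetical and chosen only for illustration. The one assumption taken from DeepLabCut itself is that each tracked body part comes with an x position, a y position, and a likelihood score.

```python
from dataclasses import dataclass
from typing import Callable, Iterable, List


@dataclass
class TrackedPoint:
    """One tracked body part in one frame (hypothetical container)."""
    x: float           # horizontal position in pixels
    y: float           # vertical position in pixels
    likelihood: float  # tracking confidence in [0, 1]


def evaluate_posture(tips: List[TrackedPoint],
                     threshold_x: float,
                     min_likelihood: float = 0.9) -> bool:
    """Return True (trigger high) when the mean x position of the
    confidently tracked whisker tips exceeds threshold_x.

    This rule is an illustrative assumption; any function of the tracked
    positions could be substituted here.
    """
    confident = [p for p in tips if p.likelihood >= min_likelihood]
    if not confident:
        # No reliable estimate in this frame: keep the trigger low.
        return False
    mean_x = sum(p.x for p in confident) / len(confident)
    return mean_x > threshold_x


def run_closed_loop(frames: Iterable[List[TrackedPoint]],
                    threshold_x: float,
                    set_ttl: Callable[[bool], None]) -> None:
    """Evaluate each frame's posture and pass the result to set_ttl.

    set_ttl is a placeholder callback; on a real rig it would drive a
    digital output line.
    """
    for tips in frames:
        set_ttl(evaluate_posture(tips, threshold_x))
```

In this sketch the hardware output is abstracted as a callback, so the evaluation rule can be swapped or tested without any acquisition hardware attached. For example, passing `states.append` as `set_ttl` records the trigger state that would have been emitted for each frame.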