Apr 25, 2024 | Community Call to Action, In the Community, Open Source Resource, Resources, Uncategorized

In August 2022, we launched a repository of 3D-printed tools for neuroscience research, and last month we started a new GitHub repository so that all of the designs are available in one place. Thirty-five designs are now available in the repository, which you can...

May 4, 2023 | In the Community, Open Source Resource, OpenBehavior Team Update, Uncategorized

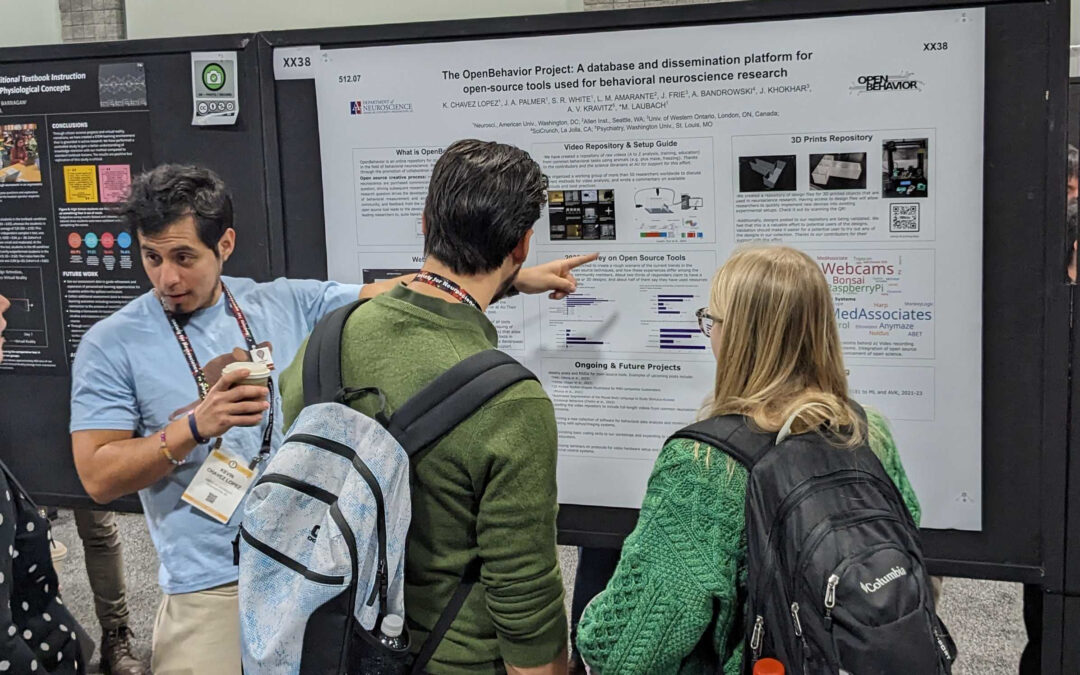

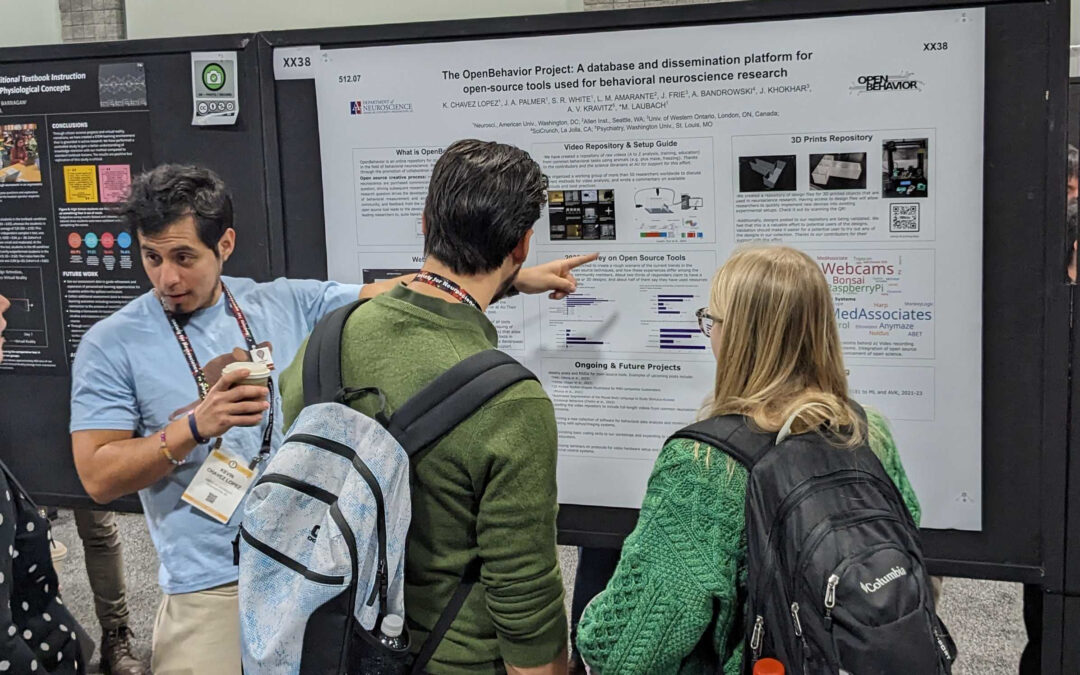

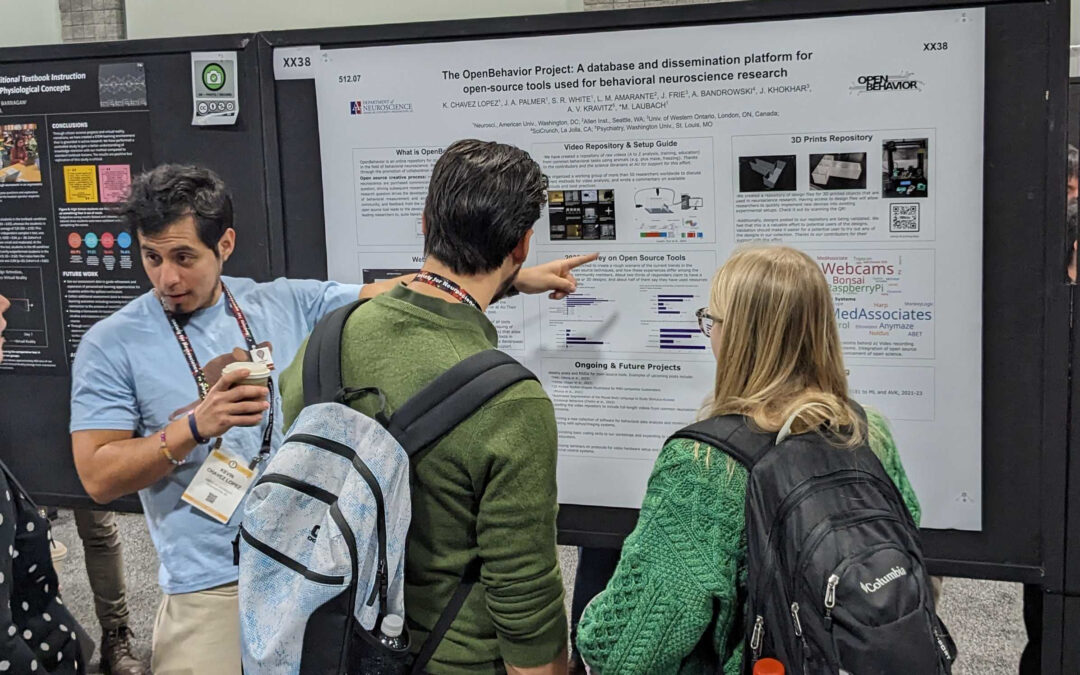

The OpenBehavior project is organizing a workshop on open-source tools for neuroscience research immediately before the 2023 meeting of the Society for Neuroscience. The workshop will be held on November 10-11, 2023, at American University in Washington, DC, USA. It...

Aug 5, 2021 | In the Community, Open Source Resource, OpenBehavior Team Update, Uncategorized

The RRID Initiative by OpenBehavior and SciCrunch

The OpenBehavior project received support from the National Science Foundation in January 2021. There are three main goals for the initial funding period: (1) create a database of open-source tools used in behavioral...

Jul 14, 2021 | Open Source Resource, Uncategorized

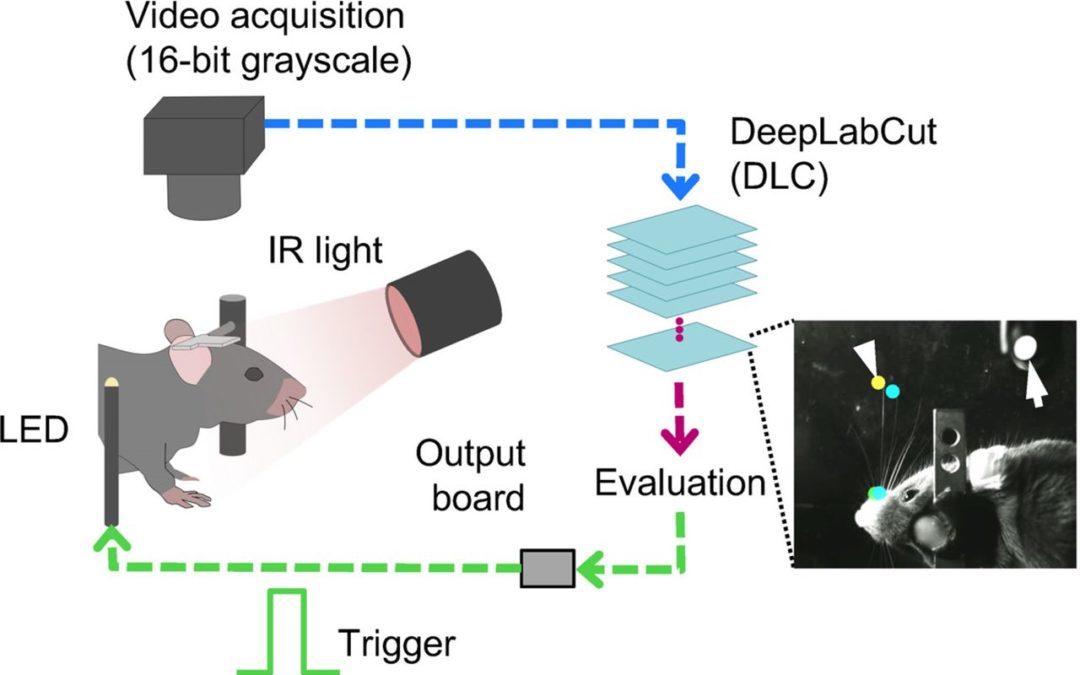

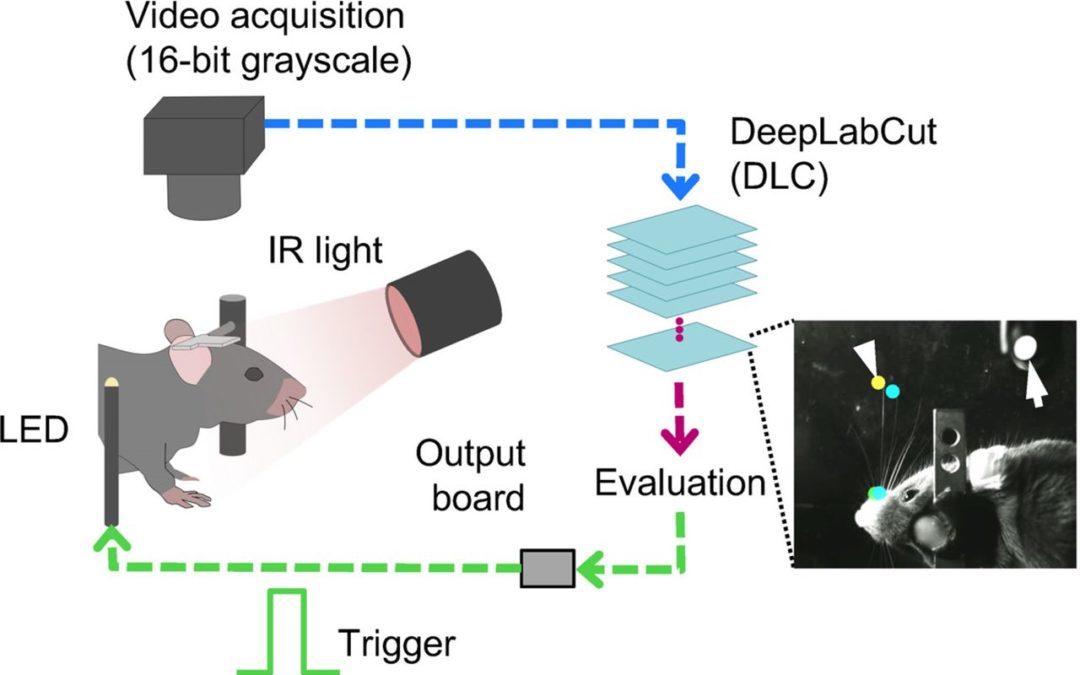

Computer vision and deep-learning approaches have made it possible to quantitatively measure animal pose and track behavior. Specifically, DeepLabCut and other tools based on deep neural networks have introduced marker-less approaches for offline pose estimation and...
