In a recent article, Jennifer Tegtmeier and colleagues shared CAVE, an open-source MATLAB tool for the combined analysis of head-mounted calcium imaging and behavior.

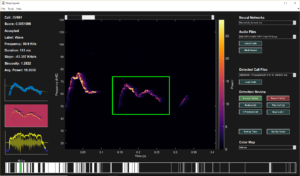

Calcium imaging is spreading through the neuroscience field like melted butter on hot toast. As with other imaging techniques, the data it produces are large and complex. CAVE (Calcium ActiVity Explorer) aims to analyze imaging data from head-mounted microscopes together with simultaneously recorded behavioral data. Tegtmeier et al. developed the software in MATLAB and bundled it with a set of algorithms designed specifically for single-photon imaging data, the results of which can then be correlated with behavior. A streamlined workflow is available for novice users, and additional options are available for more experienced users. The code is available for download from GitHub.

Read more in Frontiers in Neuroscience, or get the code directly from GitHub.