August 22, 2018

Dr. Mackenzie Mathis, Principal Investigator of the Adaptive Motor Control Lab (Rowland Institute at Harvard University), has shared the following responses to a short Q&A about the inspiration behind, development of, and sharing of DeepLabCut, a toolbox for animal tracking using deep learning.

What inspired you and your colleagues to create this toolbox as opposed to using previously developed commercial software?

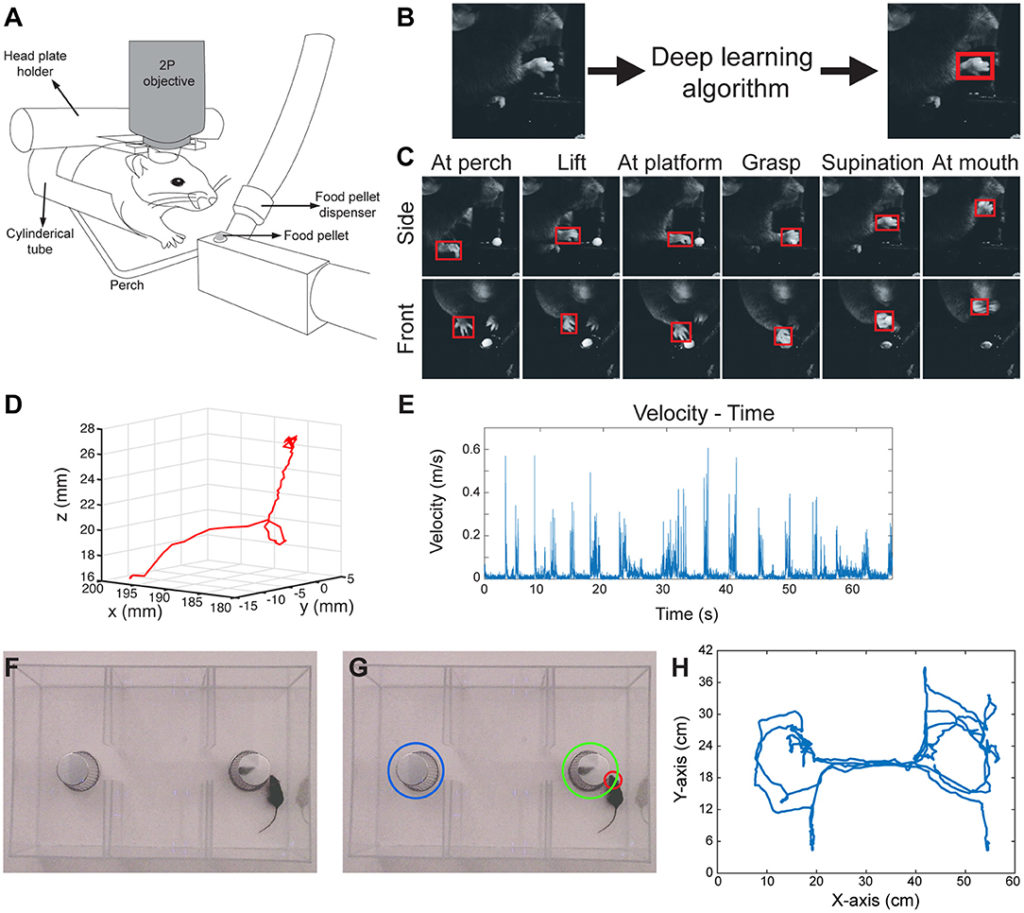

Alexander Mathis and I both worked on behaviors in which we wanted to track particular features, and the methods we tried tracked them unreliably. Specifically, Alexander works on an odor-guided navigation task in the lab of Prof. Venkatesh Murthy at Harvard. The mice are placed on a very large “endless” paper trail, and he inkjet-prints odors for them to follow to get rewards (chocolate milk). The position of the snout is very important to measure accurately, so background subtraction and other heuristics failed when the nose crossed the trail and when the droplet was right in front of the snout. I worked on a skilled joystick behavior for mice, and I wanted to track joints accurately and non-invasively, which is a challenging problem for little hands.

So, we teamed up with Prof. Matthias Bethge at the University of Tuebingen to work on a new approach. He suggested we start looking into the rapidly advancing human pose estimation literature, and we looked at several algorithms before deciding to seriously benchmark DeeperCut, a top-performing algorithm on the large MPII dataset. Those authors did something very clever: they used a deep neural network (ResNet) that was pre-trained on a large image set called ImageNet. This gives the ResNet a chance to learn natural scene statistics first. Remarkably, we found that we could use only a few training frames to track the snout very accurately in the odor-guided navigation task. We next tried videos from my joystick task, and, to flex DeepLabCut’s muscles, we teamed up with Kevin Cury (who, like me, is an alumnus of Prof. Nao Uchida’s group) to track fruit flies in a 3D chamber.

After all this benchmarking, we built a toolbox that implements a complete pipeline to extract and label frames, train and evaluate the deep neural networks, and analyze new experimental videos. We call this toolbox DeepLabCut, as a nod to DeeperCut.

What was the motivation for immediately sharing your work as an open source tool, thus making it accessible to the broader neuroscience community?

Some of the options we first tried were very expensive commercial tracking systems, and they failed quite badly. On the other hand, deep learning has revolutionized computer vision in the last few years, so we were eager to try some new approaches to the problem. So, in addition to being advocates of open science, we really wanted to make a toolbox that someone with minimal or no coding experience could use, completely free, to track whatever they wanted.

We also know peer review can be slow, so as soon as we had the toolbox in place, we wrote up the arXiv paper and released the code base immediately. Honestly, it has been one of my most rewarding papers: the feedback from our peers, and seeing what people have used the code for, has been a wonderful experience. This was my first preprint, and, especially for methods manuscripts, I now cannot imagine sharing our future work any other way.

How do you think open source tools, such as yours, will continue to impact the progress of scientific research?

Open-source code and preprints have been the norm in some fields (such as math and physics) for decades, and I am really excited to see them come of age in biology and neuroscience. I am excited to see how tools will continue to improve as the community gets behind them, just as we could build on DeeperCut, which was open source. Also, at least in my experience, many individuals write their own code, which leads to a lot of duplicated effort. Moreover, datasets are becoming increasingly complex, and the code used to work with such data needs to be robust and shared. My expectation is that open-source code will become the norm in the future, which can only help science become more robust.

Even before formal publication this week (see Nature Neuroscience), we estimate that about 100 labs are actively using DeepLabCut, so we hope that releasing the code before publication has really allowed rapid progress to be made. We were also very happy that The Atlantic could highlight some of the early adopters, because it’s one thing to say you made something, but another to hear others say it is actually ‘something.’

DeepLabCut provides an efficient method for markerless pose estimation based on transfer learning with deep neural networks that achieves excellent results with minimal training data. Read more on the website, or in Nature Neuroscience.

Mathis, A., Mamidanna, P., Cury, K. M., Abe, T., Murthy, V. N., Mathis, M. W., & Bethge, M. (2018). DeepLabCut: Markerless pose estimation of user-defined body parts with deep learning. Nature Neuroscience. doi:10.1038/s41593-018-0209-y