June 20, 2019

Ahmet Arac from Peyman Golshani’s lab at UCLA recently developed DeepBehavior, a deep-learning toolbox with post-processing methods for video analysis of behavior:

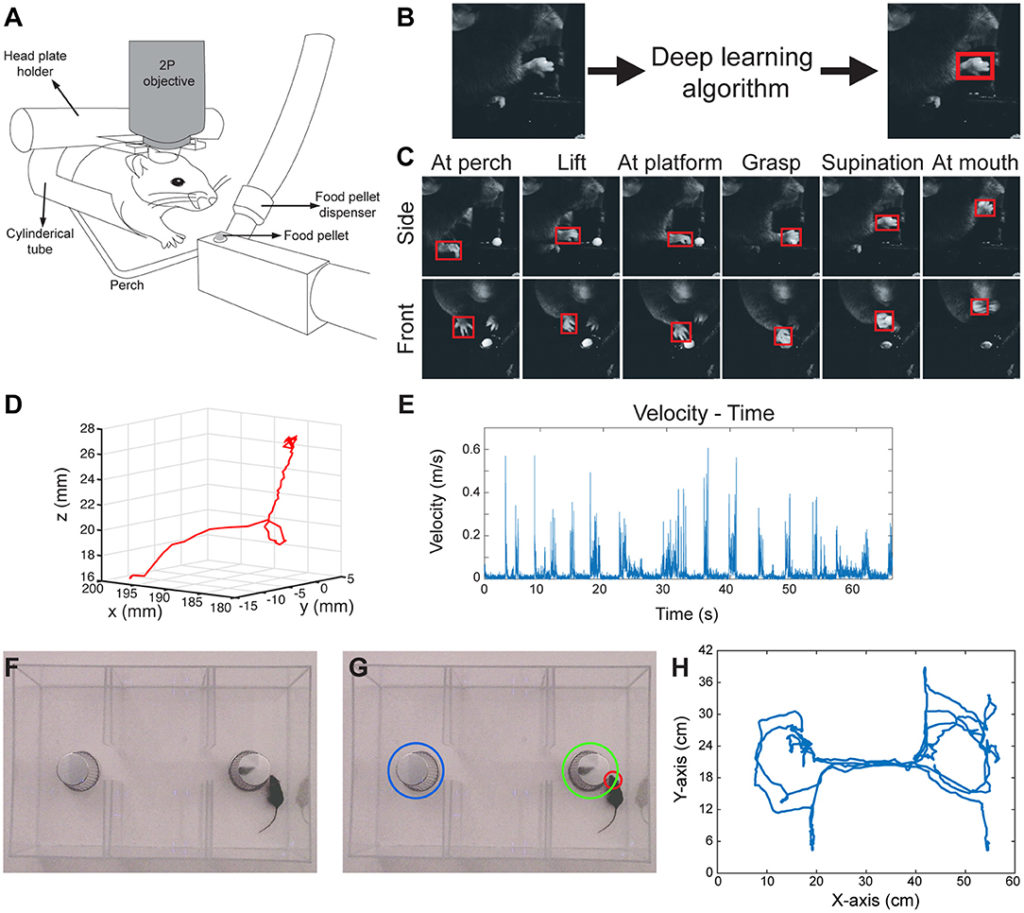

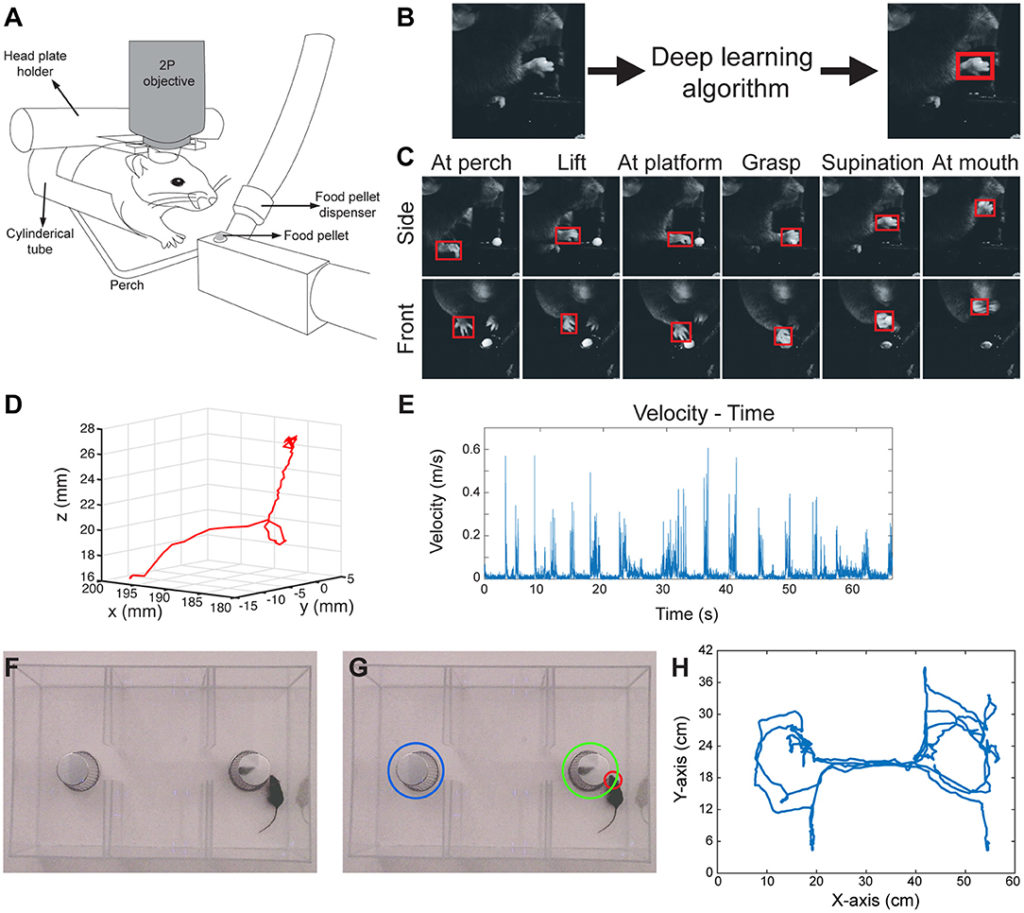

Recently, there has been a major push in neuroscience for more fine-grained and detailed behavioral analysis. While there are methods for recording high-speed, high-quality video of behavior, the resulting data still need to be processed and analyzed. DeepBehavior is a deep-learning toolbox that automates this step: its main purpose is to track and analyze behavior in rodents and humans.

The authors provide three different convolutional neural network models (TensorBox, YOLOv3, and OpenPose), which were chosen for their ease of use. The user can decide which model to implement based on the experiment and the kind of data they aim to collect and analyze. The article provides methods and tips for training neural networks on this type of data, and describes methods for post-processing the image data.
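To make the idea of post-processing concrete, here is a minimal sketch of how per-frame bounding boxes from a detection network might be turned into a behavioral measure, such as time spent in each chamber of a three-chamber arena. This is not the authors' code: the box format `(x_min, y_min, x_max, y_max)`, the chamber boundaries, the frame rate, and all function names are assumptions chosen for illustration.

```python
from collections import Counter


def box_centroid(box):
    """Return the (x, y) centre of a box given as (x_min, y_min, x_max, y_max)."""
    x_min, y_min, x_max, y_max = box
    return ((x_min + x_max) / 2.0, (y_min + y_max) / 2.0)


def chamber_of(x, boundaries):
    """Index of the chamber containing x.

    `boundaries` holds the sorted x-coordinates (in pixels) of the dividing
    walls, so two boundaries define three chambers: 0, 1, and 2.
    """
    return sum(x >= b for b in boundaries)


def time_per_chamber(boxes, boundaries, fps):
    """Seconds spent in each chamber, given one box (or None) per frame."""
    counts = Counter()
    for box in boxes:
        if box is None:  # frame with no detection: skip it
            continue
        x, _ = box_centroid(box)
        counts[chamber_of(x, boundaries)] += 1
    return {c: counts[c] / fps for c in range(len(boundaries) + 1)}


# Hypothetical detections for six frames recorded at 2 frames per second.
boxes = [
    (10, 20, 50, 60),
    (15, 20, 55, 60),
    None,
    (210, 20, 250, 60),
    (410, 20, 450, 60),
    (420, 20, 460, 60),
]
seconds = time_per_chamber(boxes, boundaries=[200, 400], fps=2)
print(seconds)
```

Frames without a detection are simply skipped here; a real pipeline would need to decide whether to interpolate or discard them.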

In the manuscript, the authors give examples of using DeepBehavior in five behavioral tasks in both animals and humans. For rodents, they use a food pellet reaching task, a three-chamber test, and social interaction between two mice. In humans, they use a reaching task and a supination / pronation task. They provide 3D kinematic analysis in all tasks, and show that the transfer learning approach accelerates network training when images from the behavior videos are used. A major benefit of this tool is that it can be modified and generalized across behaviors, tasks, and species. Additionally, DeepBehavior uses several different neural network architectures and provides post-processing methods for 3D kinematic analysis, which sets it apart from previously published toolboxes for video behavioral analysis. Finally, the authors emphasize the potential for using this toolbox in a clinical setting to analyze human motor function.
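As an illustration of what kinematic post-processing can look like once a tracked point has been reconstructed in 3D, the sketch below computes frame-to-frame speed and total path length from a list of `(x, y, z)` positions. It is a simplified example under stated assumptions, not the toolbox's implementation: the wrist trajectory, its units, the frame rate, and the function names are all hypothetical.

```python
import math


def speeds(points, fps):
    """Frame-to-frame speed from (x, y, z) positions sampled at `fps` Hz.

    Returns one value per pair of consecutive frames, in position units
    per second.
    """
    return [math.dist(p, q) * fps for p, q in zip(points, points[1:])]


def path_length(points):
    """Total distance travelled along the trajectory."""
    return sum(math.dist(p, q) for p, q in zip(points, points[1:]))


# Hypothetical wrist positions (in cm) for three frames recorded at 10 Hz.
wrist = [(0.0, 0.0, 0.0), (3.0, 4.0, 0.0), (3.0, 4.0, 12.0)]

wrist_speeds = speeds(wrist, fps=10)
total = path_length(wrist)
print(wrist_speeds, total)
```

Real trajectories are usually noisy, so smoothing the positions before differentiating would be a sensible extra step in practice.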

For more details, take a look at their project’s GitHub.

All three models used in the paper also have their own GitHub repositories: TensorBox, YOLOv3, and OpenPose.

Arac, A., Zhao, P., Dobkin, B. H., Carmichael, S. T., & Golshani, P. (2019). DeepBehavior: A deep learning toolbox for automated analysis of animal and human behavior imaging data. Frontiers in Systems Neuroscience, 13.