June 6, 2019

Richard Pinnell, from Ulrich Hofmann’s lab, has published three studies centered on open-source, 3D-printed methods. These cover protecting headstage implants and making deep brain stimulation (DBS) and EEG recording portable and waterproof, so they can be paired with water maze behavior. We share details on the three studies below:

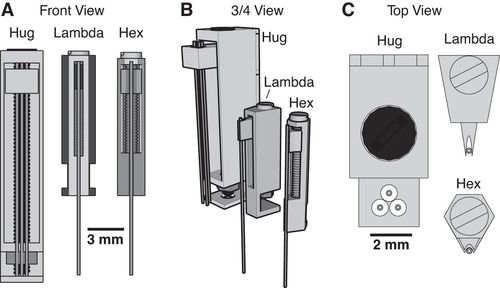

Most researchers single-house rodents after surgery, which helps the wound heal and prevents damage to the implant. However, social housing has substantial benefits, because social isolation is itself a stressor for rodents. To keep rats socially housed, Pinnell et al. (2016a) created a novel 3D-printed headstage socket that surrounds an electrode connector. Rats fitted with these implants and their protective caps were successfully pair-housed.

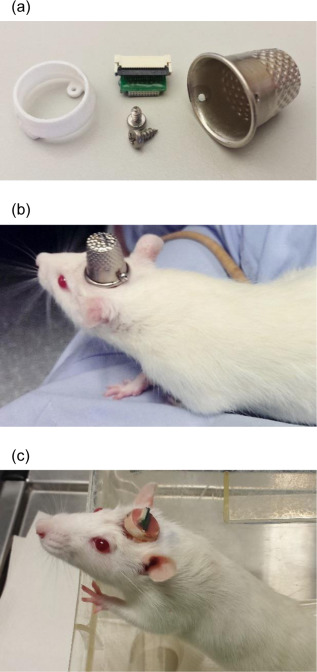

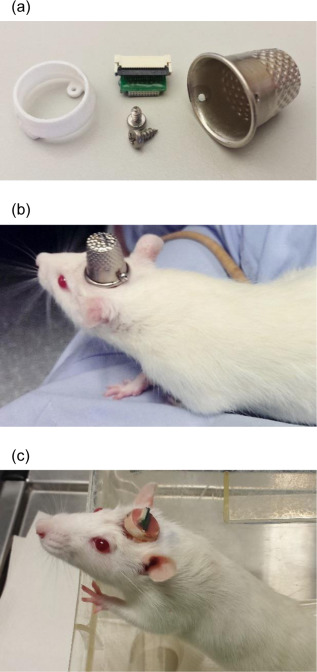

The headcap socket itself is 3D printed in polyamide, and a stainless-steel thimble screws into it. Unscrewing the thimble exposes the electrode connector. Beyond improving the animal's well-being after surgery, the implant protects the electrode assembly from damage during experiments and keeps it clean.

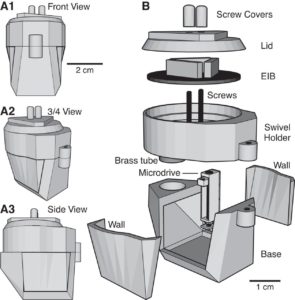

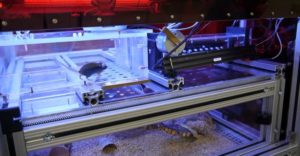

The 3D-printed headcap was used in a second study (Pinnell et al., 2016b) for wireless EEG recording in rats during a water maze task. The headstage socket housed the PCB electrode connector, to which the waterproof wireless system was attached. Under normal housing conditions, this waterproof attachment was replaced with a standard 18 × 9 mm stainless-steel sewing thimble with 1.2 mm holes drilled at either end for attachment to the headstage socket. A PCB connector was manufactured to fit inside the socket. It carries an 18-pin ZIF connector, two DIP connectors, and an 18-pin Omnetics electrode connector, providing the interface between the implanted electrodes and the wireless recording system.

Finally, the implant was used in a third study (Pinnell et al., 2018), in which the same group built a miniaturized, programmable deep-brain stimulator for use in the water maze. The portable stimulator is built on a custom PCB and paired with the 3D-printed headcap, which was modified from the Pinnell et al. (2016a) design to cover the implant completely and protect the PCB. Together, the device, its battery, and the housing weigh 2.7 g. The housing protects the stimulator both from the environment and from other rats, so it can be used in DBS studies during water maze behavior.

The portable stimulator design, the 3D-printed cap .stl files, and other files from the publications are available on figshare: https://figshare.com/s/31122e0263c47fa5dabd.

Pinnell, R. C., Almajidy, R. K., & Hofmann, U. G. (2016a). Versatile 3D-printed headstage implant for group housing of rodents. Journal of Neuroscience Methods, 257, 134–138.

Pinnell, R. C., Almajidy, R. K., Kirch, R. D., Cassel, J. C., & Hofmann, U. G. (2016b). A wireless EEG recording method for rat use inside the water maze. PLoS ONE, 11(2), e0147730.

Pinnell, R. C., Pereira de Vasconcelos, A., Cassel, J. C., & Hofmann, U. G. (2018). A miniaturized, programmable deep-brain stimulator for group-housing and water maze use. Frontiers in Neuroscience, 12, 231.