OCTOBER 3, 2019

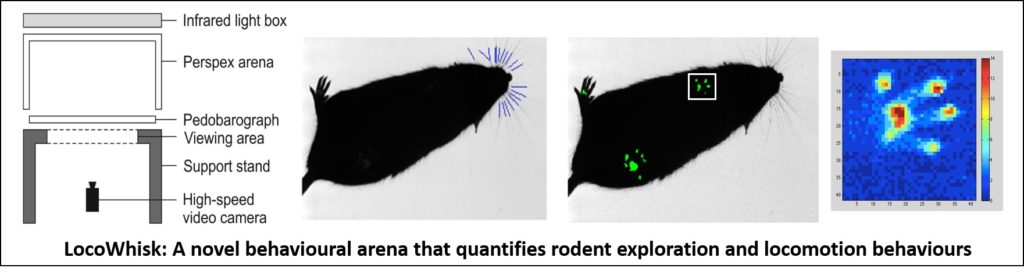

Dr Robyn Grant from Manchester Metropolitan University in Manchester, UK, has shared her group’s most recent project, LocoWhisk, a hardware and software solution for measuring rodent exploratory, sensory and motor behaviours:

In describing the project, Dr Grant writes, “Previous studies from our lab have shown that analysing whisker movements and locomotion allows us to quantify the behavioural consequences of sensory, motor and cognitive deficits in rodents. Independent whisker and feet trackers existed, but there was no fully automated, open-source software and hardware solution that could measure both whisker movements and gait.

We developed the LocoWhisk arena and new accompanying software, which allow the automatic detection and measurement of both whisker and gait information from high-speed video footage. The arena can easily be made from low-cost materials; it is portable and incorporates both gait analysis (using a pedobarograph) and whisker movement analysis (using a high-speed video camera and infrared light source).

The software, ARTv2, is freely available and open source. ARTv2 is also fully automated and has been developed from our previous ART software (Automated Rodent Tracker).

ARTv2 contains new whisker and foot detector algorithms. On high-speed video footage of freely moving small mammals (including rat, mouse and opossum), we have found that ARTv2 is comparable in accuracy to, and in some cases significantly better than, readily available software and manual trackers.

The LocoWhisk system enables the collection of quantitative data from whisker movements and locomotion in freely behaving rodents. The software automatically records both whisker and gait information and provides added statistical tools to analyse the data. We hope the LocoWhisk system and software will serve as a solid foundation from which to support future research in whisker and gait analysis.”

For more details on the ARTv2 software, check out the GitHub page here.

Check out the paper that describes LocoWhisk and ARTv2, which has recently been published in the Journal of Neuroscience Methods.

LocoWhisk was initially shared and developed through the NC3Rs CRACK IT website here.