Lightning Pose: a semi-supervised animal pose estimation algorithm, Bayesian post-processing approach, and deep learning package

Dan Biderman and colleagues from Columbia University have released Lightning Pose, an open-source package for improved pose estimation, on GitHub, and described it in a preprint on bioRxiv. Links are provided below.

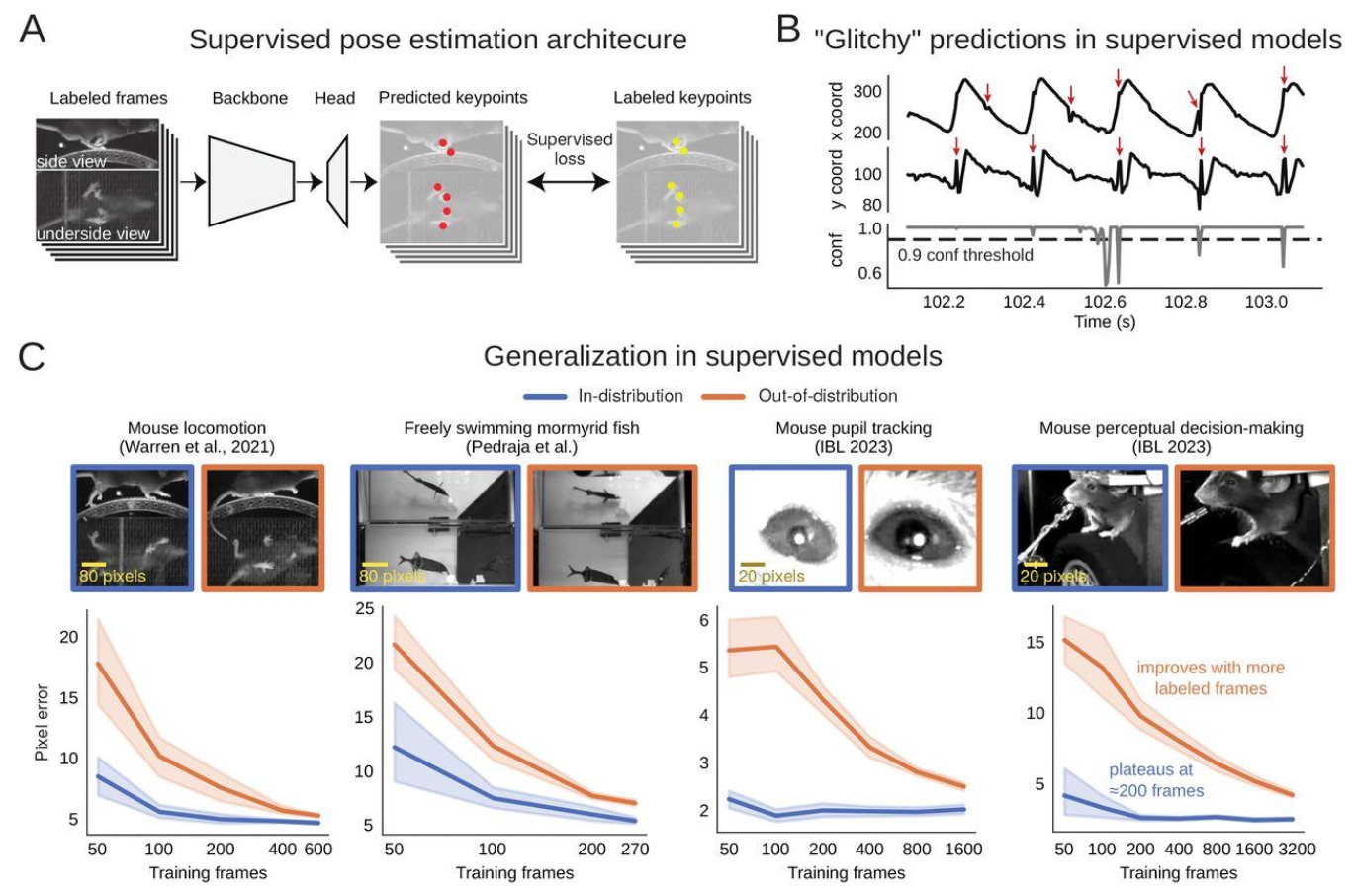

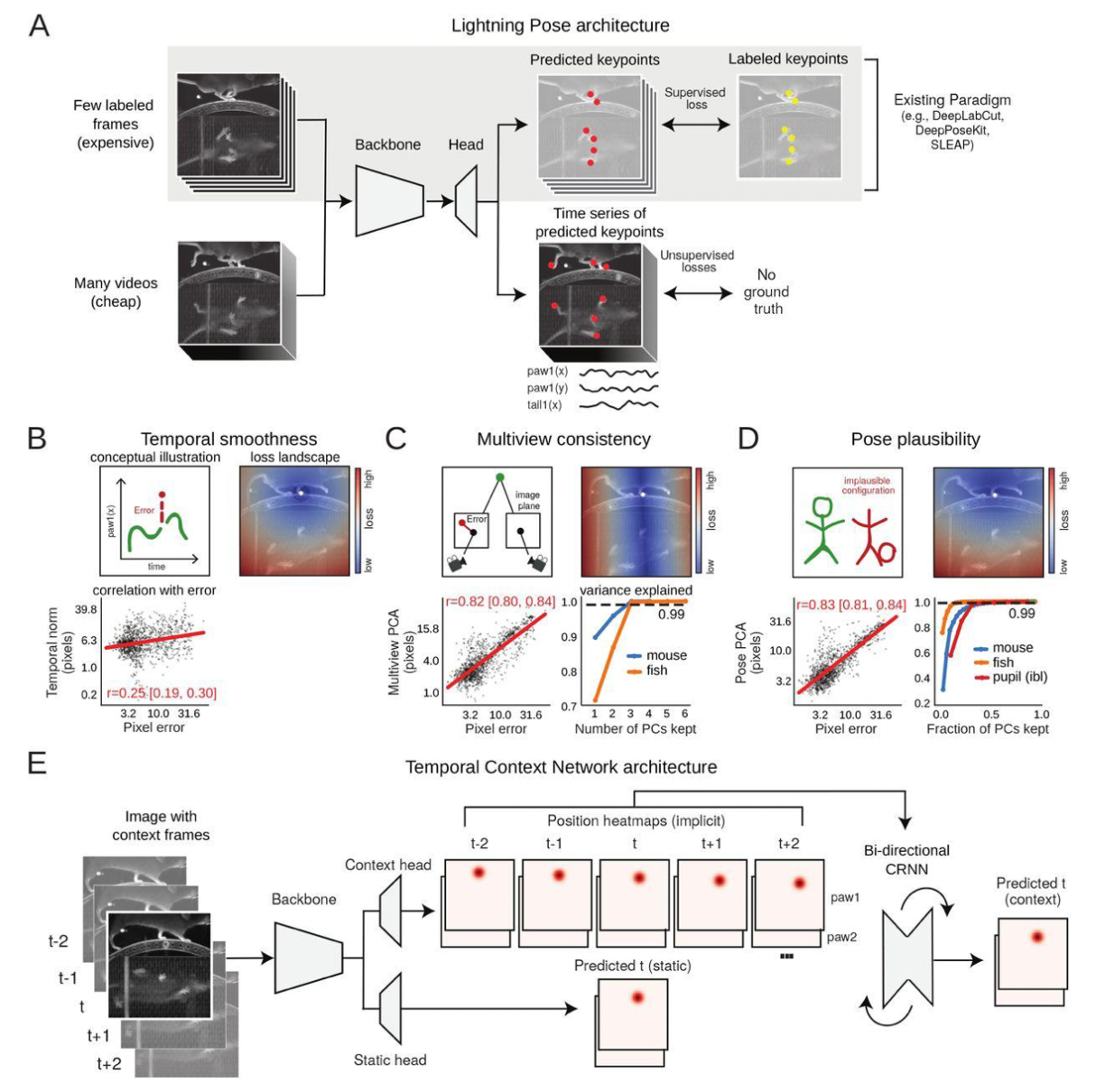

Lightning Pose uses a semi-supervised approach to make pose estimation more robust, with contributions at three levels: modeling, software, and a cloud-based application.

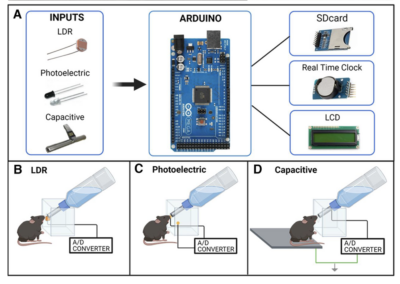

The estimation framework consists of two components: a set of training objectives, and a network architecture that predicts the pose in a given frame using temporal context from unlabeled frames. The networks are trained on both labeled and unlabeled frames and produce fast predictions, and the temporal context architecture helps resolve anatomical ambiguities.
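To make the idea of training on labeled and unlabeled frames concrete, here is a minimal sketch of a combined objective. It is an illustration under stated assumptions, not the package's actual code: the function names, the `max_jump` threshold, and the `weight` value are all hypothetical, and the unsupervised term shown (a penalty on implausibly large frame-to-frame jumps) is just one example of an objective that needs no labels.

```python
import math
from typing import List, Tuple

Point = Tuple[float, float]  # (x, y) pixel coordinates of one keypoint


def supervised_loss(pred: List[Point], target: List[Point]) -> float:
    """Mean squared pixel error on labeled frames."""
    total = 0.0
    for (px, py), (tx, ty) in zip(pred, target):
        total += (px - tx) ** 2 + (py - ty) ** 2
    return total / len(pred)


def temporal_loss(track: List[Point], max_jump: float) -> float:
    """Penalty on consecutive-frame jumps above max_jump; uses no labels."""
    if len(track) < 2:
        return 0.0
    total = 0.0
    for (x0, y0), (x1, y1) in zip(track, track[1:]):
        jump = math.hypot(x1 - x0, y1 - y0)
        total += max(0.0, jump - max_jump)  # small movements are not penalized
    return total / (len(track) - 1)


def semi_supervised_loss(
    pred_labeled: List[Point],
    target_labeled: List[Point],
    pred_unlabeled: List[Point],
    max_jump: float = 20.0,  # hypothetical threshold, in pixels
    weight: float = 0.5,     # hypothetical weight on the unsupervised term
) -> float:
    """Supervised error plus a weighted unsupervised penalty."""
    return supervised_loss(pred_labeled, target_labeled) + weight * temporal_loss(
        pred_unlabeled, max_jump
    )
```

In this sketch the labeled frames anchor the predictions to the annotations, while the unlabeled video frames only discourage physically implausible motion, so many more frames can shape the network than were ever annotated.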

The Bayesian post-processing approach further improves tracking predictions. It aggregates the predictions of multiple networks and models them with a spatially constrained Kalman smoother that accounts for their collective uncertainty.
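The aggregation step can be sketched for a single coordinate of a single keypoint. This is a simplified illustration, not the authors' implementation: it assumes a one-dimensional random-walk motion model, uses the variance across networks as the observation noise, and leaves out the spatial constraints entirely. The function names and the `process_var` and `min_obs_var` parameters are hypothetical.

```python
from typing import List, Tuple


def ensemble_stats(preds: List[List[float]]) -> Tuple[List[float], List[float]]:
    """preds[m][t] is network m's prediction at frame t.

    Returns the per-frame mean and variance across networks.
    """
    n_models, n_frames = len(preds), len(preds[0])
    means, variances = [], []
    for t in range(n_frames):
        vals = [preds[m][t] for m in range(n_models)]
        mu = sum(vals) / n_models
        means.append(mu)
        variances.append(sum((v - mu) ** 2 for v in vals) / n_models)
    return means, variances


def kalman_smooth(
    obs: List[float],
    obs_var: List[float],
    process_var: float = 1.0,   # assumed frame-to-frame motion variance
    min_obs_var: float = 1e-3,  # floor so full agreement never gives zero noise
) -> List[float]:
    """Kalman filter plus Rauch-Tung-Striebel smoother, random-walk model."""
    filt_mean, filt_var, pred_mean, pred_var = [], [], [], []
    for t in range(len(obs)):
        if t == 0:
            pm, pv = obs[0], 1e6  # diffuse prior on the first frame
        else:
            pm, pv = filt_mean[-1], filt_var[-1] + process_var
        r = max(obs_var[t], min_obs_var)
        gain = pv / (pv + r)  # low gain when the networks disagree
        pred_mean.append(pm)
        pred_var.append(pv)
        filt_mean.append(pm + gain * (obs[t] - pm))
        filt_var.append((1.0 - gain) * pv)
    # Backward pass: refine each frame using information from later frames.
    smooth = filt_mean[:]
    for t in range(len(obs) - 2, -1, -1):
        c = filt_var[t] / pred_var[t + 1]
        smooth[t] = filt_mean[t] + c * (smooth[t + 1] - pred_mean[t + 1])
    return smooth


# Three networks agree except at frame 2, where they scatter widely.
preds = [
    [10.0, 10.0, 10.0, 10.0, 10.0],
    [10.0, 10.0, 40.0, 10.0, 10.0],
    [10.0, 10.0, -5.0, 10.0, 10.0],
]
means, variances = ensemble_stats(preds)
smoothed = kalman_smooth(means, variances)
```

Where the networks agree, the smoother follows the ensemble mean closely. Where they disagree, as at frame 2 in the example, the large ensemble variance lowers the weight of that observation and the estimate leans on neighboring frames instead.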

The deep learning package comes with a cloud-hosted application that supports rapid model prototyping. The application runs in any web browser and covers the entire pose estimation cycle: uploading raw videos to the cloud, annotating frames, training networks in parallel, and diagnosing the reliability of the results. Trained models can then be deployed for fast prediction. You can access the cloud-hosted application, PoseApp, here.

This research tool was created by your colleagues. Please acknowledge the Principal Investigator, cite the article in which the tool was described, and include an RRID in the Materials and Methods of your future publications. RRID: SCR_024480

Special thanks to Lan Hooton, a neuroscience undergraduate at American University, for providing this project summary.

Access the code from GitHub!

Check out the Lightning Pose repository on GitHub.

Read more about it!